Design of Network Monitoring System Based on Embedded Linux Video

Abstract: This paper introduces a network video monitoring system based on embedded Linux. The system uses a Browser/Server (B/S) architecture with an embedded Web server built into the video server, and the paper focuses on the design of the server side.

Keywords: Embedded Linux; Web server; MPEG-4; network communication

With the rapid development of computer and network technology, the public safety and security industry is inevitably moving toward full digitalization and networking. The traditional analog CCTV monitoring system has many limitations: its transmission distance is limited, it cannot be networked, storing analog video consumes large amounts of storage media (such as video tapes), and searching recordings for evidence is very cumbersome. Video monitoring systems based on personal computers have powerful terminal functions but poor stability, and their video front end (CCD-based signal acquisition, compression, and communication) is complicated and not very reliable. A network monitoring system based on embedded Linux video needs no personal computer to process the analog video signal. Instead, an embedded Web server is built into the video server, which runs an embedded real-time multitasking operating system. Because video compression and Web functions are concentrated in one small device, it can be connected directly to a LAN and viewed as soon as it is plugged in. It avoids complex cabling and is easy to install (only an IP address needs to be set), and users can watch the video in an ordinary browser without installing any additional hardware.

The embedded Linux-based video network monitoring system connects the embedded Linux system to the Web; that is, the video server has a built-in embedded Web server. The video signal from the camera is digitized, compressed by a high-efficiency compression chip, and passed over the internal bus to the built-in Web server.

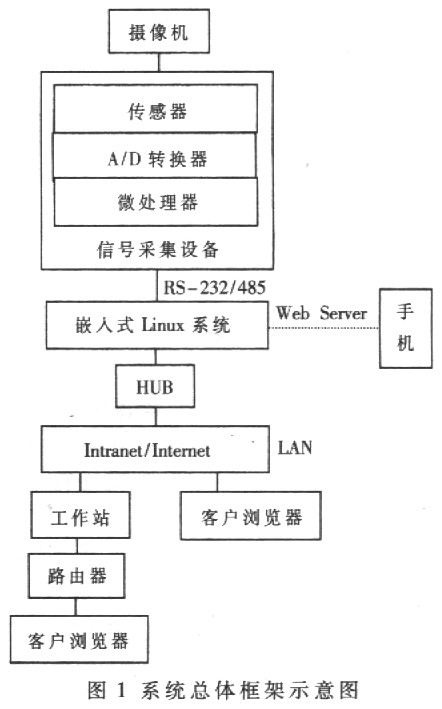

1 System overall framework

The embedded Linux video network monitoring system is an organic combination of electrical and electronic devices, computer hardware and software, networking, and communication. It is intelligent, networked, and interactive, and its structure is relatively complex. By following the content and form of the OSI seven-layer model and organically combining the corresponding data acquisition and control module hardware, application software, and application environment, a unified overall system framework can be formed. The schematic diagram of the overall framework is shown in Figure 1.

After the video signal from the camera is digitized and compressed, the data is sent to the built-in Web server through RS-232/RS-485. The 10/100M Ethernet port of the embedded Linux system provides access to the Internet and delivers the live signal to the client. The core of the whole system is the embedded Linux system: after the monitoring system starts, it launches the Web Server service program, receives requests from client browsers, and authorizes them. The Web Server then carries out the corresponding monitoring tasks according to the communication protocol.

2 System implementation

2.1 Hardware platform design

This system is based on publicly available embedded Linux source code: a Bootloader is written for the custom embedded target board, and the kernel and file system are then tailored to fit. The target CPU is Motorola's ColdFire embedded processor MCF5272, which uses the ColdFire V2 variable-length RISC core and DigitalDNA technology and delivers 63 Dhrystone 2.1 MIPS at a 66 MHz clock. Its internal SIM (System Integration Module) integrates a wealth of general-purpose modules, so only a few peripheral chips are needed to implement two RS-232 serial ports and a USB slave interface. The MCF5272 also embeds an FEC (Fast Ethernet Controller); adding an external LXT971 PHY chip conveniently implements a 10/100BASE-T Ethernet interface. The processor connects seamlessly to common peripherals (such as SDRAM and ISDN transceivers), which simplifies the peripheral circuit design and reduces product cost, size, and power consumption.
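For scale, the quoted benchmark figure corresponds to slightly under one Dhrystone MIPS per megahertz of clock:

$$\frac{63\ \text{DMIPS}}{66\ \text{MHz}} \approx 0.95\ \text{DMIPS/MHz}$$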

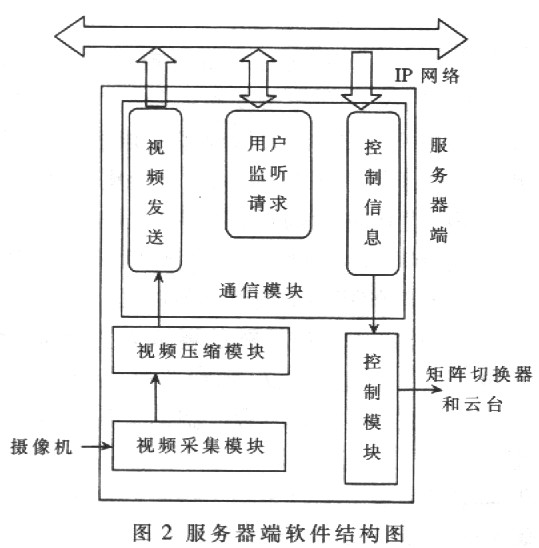

2.2 Software design and implementation

The software of the video surveillance system uses the browser/server (B/S) network model: the client sends a request to the server over the Web, and the server responds and performs the corresponding task (such as sending the multicast address, image format, and compression format to the client). Once the connection is established, the monitored point can be watched from the client, achieving remote network monitoring. The software on the server (Web Server) side, that is, at the on-site monitoring point, consists of the acquisition module, compression encoding module, network communication module, and control module, as shown in Figure 2.
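To make the server's reply concrete, here is a minimal C++ sketch of a stream description the server might return to a client (multicast address, image format, compression format). The `StreamInfo` structure, the `formatStreamInfo` function, and the `key=value` text layout are hypothetical; the article does not specify the reply format.

```cpp
#include <cstdio>
#include <string>

// Hypothetical description of the live stream returned to a client after
// its request is accepted (field names are illustrative only).
struct StreamInfo {
    std::string multicastGroup;  // multicast address, e.g. "239.0.0.1"
    unsigned short port;         // UDP port of the video data channel
    int width;                   // image format: frame width in pixels
    int height;                  // image format: frame height in pixels
    std::string codec;           // compression format, e.g. "MPEG-4"
};

// Serialize the parameters as "key=value" lines. This text format is an
// assumption, not the protocol used by the original system.
std::string formatStreamInfo(const StreamInfo &info) {
    char buf[256];
    std::snprintf(buf, sizeof(buf),
                  "group=%s\nport=%u\nwidth=%d\nheight=%d\ncodec=%s\n",
                  info.multicastGroup.c_str(), (unsigned)info.port,
                  info.width, info.height, info.codec.c_str());
    return std::string(buf);
}
```

The client would parse these lines to learn which multicast group to join and how to decode what it receives.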

2.2.1 Design of video acquisition module

The camera outputs an analog video signal, which a computer cannot use directly, so the video must be digitized before it can be transmitted over the Internet. This system uses a capture card based on Conexant's Bt848. The card needs no local buffer memory for video pixel data; it makes full use of the high bandwidth and built-in multimedia capabilities of a PCI bus system and can interoperate with other multimedia devices. Because video is usually captured faster than the application software can fetch and process it, a three-buffer structure is used to keep the video data continuous. Buffer A is the target address of Bt848 capture: the Bt848 writes the captured data directly into this buffer first. Buffers B and C form a ping-pong pair that is used repeatedly. When a frame has been captured, an interrupt is generated and the interrupt service routine copies the data in buffer A to buffer B (or C); the next frame is then captured, and when it is complete the data in buffer A is copied to buffer C (or B). When the application needs data, it reads the latest frame from buffer B or C. Alternating between B and C keeps the application reading from the buffers and the driver writing to them from conflicting, avoiding corrupted data and delays.
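The three-buffer scheme can be sketched as a small C++ class. This is a single-threaded simulation under stated assumptions: `TripleBuffer`, `onFrameCaptured`, and `readLatest` are hypothetical names, `onFrameCaptured` stands in for the interrupt service routine, and a real driver would also need to mask the interrupt (or take a lock) while the application copies a frame out.

```cpp
#include <array>
#include <cstdint>
#include <cstring>
#include <vector>

// Buffer A is the capture target; B and C alternate (ping-pong) as the
// holder of the most recent complete frame.
class TripleBuffer {
public:
    explicit TripleBuffer(std::size_t frameBytes)
        : a_(frameBytes), bc_{std::vector<std::uint8_t>(frameBytes),
                              std::vector<std::uint8_t>(frameBytes)} {}

    // Buffer A: where the capture hardware deposits the incoming frame.
    std::uint8_t *captureTarget() { return a_.data(); }

    // Simulated end-of-frame interrupt: copy A into whichever of B/C does
    // NOT hold the newest frame, then mark that buffer as newest.
    void onFrameCaptured() {
        int dst = (latest_ < 0) ? 0 : 1 - latest_;
        std::memcpy(bc_[dst].data(), a_.data(), a_.size());
        latest_ = dst;
    }

    // Application side: copy out the newest complete frame.
    // Returns false if no frame has been captured yet.
    bool readLatest(std::vector<std::uint8_t> &out) const {
        if (latest_ < 0) return false;
        out = bc_[latest_];
        return true;
    }

    // 'B' or 'C' (or '-' before the first frame), for inspection.
    char latestName() const {
        return latest_ < 0 ? '-' : (latest_ == 0 ? 'B' : 'C');
    }

private:
    std::vector<std::uint8_t> a_;                  // buffer A
    std::array<std::vector<std::uint8_t>, 2> bc_;  // buffers B and C
    int latest_ = -1;                              // index of newest frame in bc_
};
```

Consecutive frames land in B, C, B, C, and so on, so the application always finds one complete frame that the next copy does not touch.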

2.2.2 Design of video compression coding

This design uses object-based MPEG-4 video coding. Input VOP (video object plane) sequences of arbitrary shape are coded with block-based hybrid coding, processed in the order intra-coded VOP (I-VOP), predicted VOP (P-VOP), and bidirectionally predicted VOP (B-VOP). After the shape information of a VOP is encoded, samples of the arbitrarily shaped VOP are obtained. Each VOP is divided into non-overlapping macroblocks by a macroblock grid; each macroblock contains four 8 × 8 luminance blocks and is the unit for motion estimation, motion compensation, and texture coding. Encoded VOPs are kept in the VOP frame memory, and motion vectors are computed between the current VOP and previously encoded VOPs. For each block or macroblock to be coded, the motion-compensated prediction error is computed. I-VOPs and the motion-compensated prediction errors are transformed with an 8 × 8 block DCT, and the DCT coefficients are quantized and then run-length and entropy coded. Finally, the shape, motion, and texture information is combined into a VOL (video object layer) bitstream. This coding method lets users modify, add, or reposition objects in a video scene, and even change how objects in the scene behave. It is not necessary to encode separately for every bandwidth and computational-complexity requirement: the same bitstream can serve them all, with different parameters selecting different layers. Even under network congestion or packet loss, it still provides video images of relatively consistent quality. The compression process places the encoded video in the encoded-video buffer queue and then activates, or waits for, the live-broadcast process and the storage-management process that handle it.
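The transform-coding stage described above (8 × 8 DCT, quantization, run-length coding) can be illustrated with the C++ sketch below. It is a simplification, not an MPEG-4 encoder: it uses the direct DCT-II formula, a single uniform quantizer step in place of MPEG-4's quantization rules, the conventional zigzag scan, and emits (run, level, last) events without the variable-length entropy-coding tables. All function names are hypothetical.

```cpp
#include <array>
#include <cmath>
#include <vector>

using Block = std::array<double, 64>;  // 8x8 samples or coefficients, row-major
using QBlock = std::array<int, 64>;    // quantized coefficients

// Forward 8x8 DCT-II in its direct form (clear, not fast).
Block dct8x8(const Block &f) {
    const double pi = std::acos(-1.0);
    Block F{};
    for (int u = 0; u < 8; ++u)
        for (int v = 0; v < 8; ++v) {
            double sum = 0.0;
            for (int x = 0; x < 8; ++x)
                for (int y = 0; y < 8; ++y)
                    sum += f[x * 8 + y] * std::cos((2 * x + 1) * u * pi / 16.0)
                                        * std::cos((2 * y + 1) * v * pi / 16.0);
            double cu = (u == 0) ? 1.0 / std::sqrt(2.0) : 1.0;
            double cv = (v == 0) ? 1.0 / std::sqrt(2.0) : 1.0;
            F[u * 8 + v] = 0.25 * cu * cv * sum;
        }
    return F;
}

// Simplified uniform quantizer: round each coefficient / step.
QBlock quantize(const Block &F, int step) {
    QBlock q{};
    for (int i = 0; i < 64; ++i) q[i] = static_cast<int>(std::lround(F[i] / step));
    return q;
}

// Zigzag scan order: walk the anti-diagonals (row + col = s), alternating
// direction, so low-frequency coefficients come first.
std::array<int, 64> zigzagOrder() {
    std::array<int, 64> order{};
    int idx = 0;
    for (int s = 0; s < 15; ++s) {
        int lo = s < 8 ? 0 : s - 7, hi = s < 8 ? s : 7;
        if (s % 2 == 0)
            for (int r = hi; r >= lo; --r) order[idx++] = r * 8 + (s - r);
        else
            for (int r = lo; r <= hi; ++r) order[idx++] = r * 8 + (s - r);
    }
    return order;
}

// One event per nonzero coefficient: zeros skipped before it (run), its
// value (level), and whether it is the last nonzero one in the block.
struct RunLevel { int run; int level; bool last; };

std::vector<RunLevel> runLength(const QBlock &q) {
    static const std::array<int, 64> zz = zigzagOrder();
    std::vector<RunLevel> events;
    int run = 0;
    for (int i = 0; i < 64; ++i) {
        int level = q[zz[i]];
        if (level == 0) { ++run; continue; }
        events.push_back({run, level, false});
        run = 0;
    }
    if (!events.empty()) events.back().last = true;
    return events;
}
```

A flat 8 × 8 block, for instance, yields a single DC event, since every AC coefficient of a constant block is zero; this is why smooth image regions compress so well.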

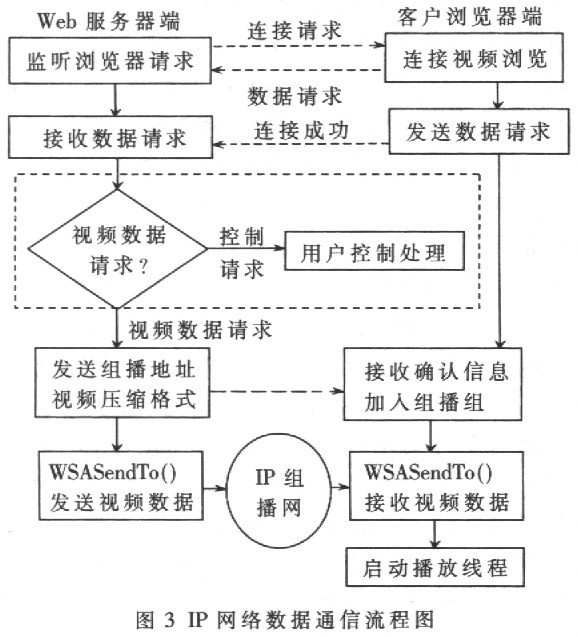

2.2.3 Network communication module design

The network communication module is the main part of the system. It contains three data channels: a monitoring channel, a control channel, and a video data channel. The monitoring channel carries command data for controlling the front-end equipment, and the video data channel carries the video data of each group. The three channels use different communication ports, so the data on each channel is transmitted independently. The module is built on the Windows Sockets network programming interface (Winsock). Following the system's browser/server network transmission model, the server side creates SOCKET-type listening and control sockets, and the client side creates SOCKET-type request and control sockets; all of these are carried over the TCP protocol.

In addition, both the server and the client use a multicast class (CMulticast) written specifically for video transmission. Derived from CObject, it defines SOCKET-type sockets and group sockets for sending and receiving video data, so that video packets encapsulated in UDP are transmitted by multicast. The IP network data communication process of the system is shown in Figure 3.
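On a Linux host, the UDP multicast transport that `CMulticast` wraps would use the BSD socket API. The sketch below is a POSIX substitute, not the author's Windows class: the function names and the group, port, and TTL parameters are hypothetical. The sender sets `IP_MULTICAST_TTL` and sends datagrams to the group address; a receiver binds the port and joins the group with `IP_ADD_MEMBERSHIP`.

```cpp
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>
#include <cstdint>
#include <cstring>

// UDP socket for sending video packets; ttl limits router hops.
int openMulticastSender(unsigned char ttl) {
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0) return -1;
    if (setsockopt(fd, IPPROTO_IP, IP_MULTICAST_TTL, &ttl, sizeof(ttl)) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}

// Send one encoded video packet to group:port. Returns bytes sent, or -1
// if the group address is invalid or sendto() fails.
ssize_t sendVideoPacket(int fd, const char *group, std::uint16_t port,
                        const void *data, std::size_t len) {
    sockaddr_in dst;
    std::memset(&dst, 0, sizeof(dst));
    dst.sin_family = AF_INET;
    dst.sin_port = htons(port);
    if (inet_pton(AF_INET, group, &dst.sin_addr) != 1) return -1;
    return sendto(fd, data, len, 0, reinterpret_cast<sockaddr *>(&dst), sizeof(dst));
}

// UDP socket that receives packets sent to group:port.
int openMulticastReceiver(const char *group, std::uint16_t port) {
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0) return -1;
    int reuse = 1;  // best effort: let several viewers on one host share the port
    setsockopt(fd, SOL_SOCKET, SO_REUSEADDR, &reuse, sizeof(reuse));
    sockaddr_in local;
    std::memset(&local, 0, sizeof(local));
    local.sin_family = AF_INET;
    local.sin_addr.s_addr = htonl(INADDR_ANY);
    local.sin_port = htons(port);
    ip_mreq mreq;
    std::memset(&mreq, 0, sizeof(mreq));
    if (bind(fd, reinterpret_cast<sockaddr *>(&local), sizeof(local)) < 0 ||
        inet_pton(AF_INET, group, &mreq.imr_multiaddr) != 1) {
        close(fd);
        return -1;
    }
    mreq.imr_interface.s_addr = htonl(INADDR_ANY);  // let the kernel pick the interface
    if (setsockopt(fd, IPPROTO_IP, IP_ADD_MEMBERSHIP, &mreq, sizeof(mreq)) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}
```

With multicast, the server sends each packet once and the network delivers it to every client that has joined the group, which is what keeps the load on a small embedded server independent of the number of viewers.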

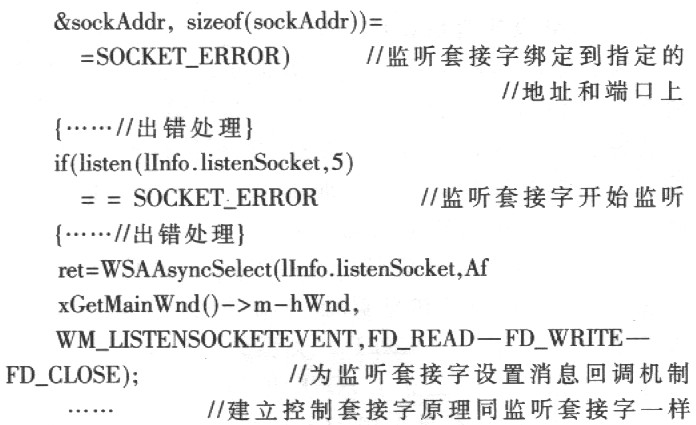

The following code fragment shows how the server side establishes its listening socket and control socket.

```cpp
...

int ret = 0;

// Allow the listening socket to reuse the port address
BOOL bFlag = TRUE;
ret = setsockopt(IInfo.listenSocket, SOL_SOCKET, SO_REUSEADDR,
                 (char *)&bFlag, sizeof(bFlag));

// Define the address of the listening socket
SOCKADDR_IN sockAddr;
memset(&sockAddr, 0, sizeof(sockAddr));  // clear sin_zero and padding
char *addr = severaddr.GetBuffer(0);    // server IP address held in a CString
sockAddr.sin_family = AF_INET;
sockAddr.sin_addr.S_un.S_addr = inet_addr(addr);
sockAddr.sin_port = htons(PORT);

if (bind(IInfo.listenSocket, (LPSOCKADDR)&sockAddr,
         sizeof(sockAddr)) == SOCKET_ERROR)
    ...
```
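The fragment above targets Winsock. On the embedded Linux server itself, the same steps (enable `SO_REUSEADDR`, fill in the address, `bind`, then `listen`) would use the POSIX socket API. A minimal sketch, with a hypothetical `openListenSocket` helper:

```cpp
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>
#include <cstdint>
#include <cstring>

// Create a TCP listening socket bound to addr:port (addr as a dotted quad).
// Returns the descriptor, or -1 on any failure.
int openListenSocket(const char *addr, std::uint16_t port, int backlog) {
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0) return -1;
    int reuse = 1;  // allow a quick restart on the same port
    if (setsockopt(fd, SOL_SOCKET, SO_REUSEADDR, &reuse, sizeof(reuse)) < 0) {
        close(fd);
        return -1;
    }
    sockaddr_in sa;
    std::memset(&sa, 0, sizeof(sa));  // zero sin_zero, as in the fragment above
    sa.sin_family = AF_INET;
    sa.sin_port = htons(port);
    if (inet_pton(AF_INET, addr, &sa.sin_addr) != 1 ||
        bind(fd, reinterpret_cast<sockaddr *>(&sa), sizeof(sa)) < 0 ||
        listen(fd, backlog) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}
```

Passing port 0 asks the kernel for an ephemeral port, which is convenient for testing; a deployed server would pass its fixed listening port and then call `accept()` on the returned descriptor for each client.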

2.2.4 Control module design and development

The control module lets the user control front-end equipment such as the lens, the pan/tilt head, and screen switching. When the server receives a control information frame from the monitoring terminal at the client center, it checks and parses the frame and passes it to the interface of the corresponding control component, which carries out the requested action.
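The parse-and-dispatch step can be sketched as follows. The article does not give the control frame format, so the 5-byte layout (sync byte `0xA5`, target, command, parameter, additive checksum), the `Target` codes, and the handler names are all hypothetical.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical 5-byte control frame (layout assumed for illustration):
//   [0] 0xA5 sync   [1] target component   [2] command
//   [3] parameter   [4] checksum = (byte1 + byte2 + byte3) & 0xFF
enum class Target : std::uint8_t { Lens = 1, PanTilt = 2, Screen = 3 };

struct ControlFrame {
    Target target;
    std::uint8_t command;
    std::uint8_t param;
};

// Validate a raw frame; on success fill 'out' and return true.
bool parseControlFrame(const std::uint8_t *buf, std::size_t len, ControlFrame &out) {
    if (len != 5 || buf[0] != 0xA5) return false;
    std::uint8_t sum = static_cast<std::uint8_t>(buf[1] + buf[2] + buf[3]);
    if (sum != buf[4]) return false;              // corrupted frame
    if (buf[1] < 1 || buf[1] > 3) return false;   // unknown component
    out = {static_cast<Target>(buf[1]), buf[2], buf[3]};
    return true;
}

// Route a parsed frame to the interface of the matching component. The
// handlers are stand-ins that only report their name; a real system would
// drive the lens, pan/tilt decoder, or video switch here.
const char *dispatchControl(const ControlFrame &f) {
    switch (f.target) {
        case Target::Lens:    return "lens";      // e.g. zoom / focus
        case Target::PanTilt: return "pan-tilt";  // head movement
        case Target::Screen:  return "screen";    // switch displayed camera
    }
    return "unknown";
}
```

Rejecting frames with a bad sync byte or checksum before dispatch keeps a corrupted command from moving the wrong device.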

3 Experimental results

The Web server of the video surveillance system is connected to a local area network with Internet access and assigned an IP address. On the user terminal, an ordinary browser can display only a single video window, which is inconvenient, so a multi-screen browser program was built with Microsoft Visual C++ 6.0 and Microsoft's Web browser control, which takes only a few minutes. When the address of the video server is entered directly in the browser's address bar, stable, smooth, real-time remote images play on the browser page, giving good monitoring results.

The Web server of the network monitoring system based on embedded Linux video connects directly to the network, free of the cable-length and signal-attenuation limits of analog systems. Because network transmission is independent of distance, the system is not tied to one region and the monitored area can be greatly extended. Because video compression and Web functions are concentrated in one very small device that connects directly to a LAN or WAN and works as soon as it is plugged in, the real-time performance, stability, and reliability of the system are greatly improved, and no dedicated management is required, which makes the system well suited to unattended environments. As computer and network technology develops rapidly, requirements for video surveillance systems keep rising, and the system is expected to have broad application prospects in e-commerce, video conferencing, remote monitoring, remote teaching, telemedicine, water conservancy, and power-system monitoring.
