At the end of 2017, Facebook's Applied Machine Learning group released a paper describing Facebook's entire machine learning hardware and software infrastructure. The full text also offers a glimpse of the machine learning strategy behind Facebook's products. The paper discusses the new challenges of machine learning at global scale (data processing on the order of hundreds of millions) and presents Facebook's strategies and solutions for them, which are highly relevant to related industries and research.

Summary

Machine learning plays a pivotal role in many of Facebook's products and services. This article details how Facebook's machine learning hardware and software infrastructure meets its global-scale computing needs.

Facebook's machine learning needs are extremely diverse: it must run many different machine learning models. This complexity is deeply embedded in every layer of Facebook's system stack. In addition, a substantial fraction of all the data Facebook stores flows through the machine learning pipeline, and this data load puts tremendous pressure on Facebook's distributed high-performance training flows.

The computational demands are also intense: balancing the large amount of CPU capacity needed for real-time inference against the GPU/CPU platforms maintained for training creates considerable tension. Resolving these and other challenges requires continued, long-term effort across machine learning algorithms, software, and hardware design.

Introduction

Facebook's mission is to "Give people the power to build community and bring the world closer together." As of December 2017, Facebook connects more than 2 billion people worldwide. Over the same period, machine learning applied to practical problems at this scale has undergone a revolution, driven by a virtuous cycle of algorithmic innovation, enormous amounts of training data, and advances in high-performance computer architecture.

At Facebook, machine learning plays a key role in almost every aspect of improving the user experience, including services such as News Feed, speech and text translation, and the classification and ranking of photos and live video.

Facebook uses a variety of machine learning algorithms in these services, including support vector machines, gradient boosted decision trees, and many types of neural networks. This article describes several important aspects of Facebook's data center infrastructure that support these machine learning needs, including internal "ML-as-a-Service" flows, open-source machine learning frameworks, and distributed training algorithms.

From a hardware perspective, Facebook trains models on a mix of CPU and GPU platforms to support the training frequencies and latencies its services require. For machine learning inference, Facebook relies mainly on CPUs for all major services, with neural network ranking services such as News Feed accounting for the bulk of the compute load.

A large fraction of the vast amount of data Facebook stores passes through the machine learning pipeline, and to improve model quality this fraction keeps growing over time. The sheer volume of data needed to serve machine learning is a challenge Facebook's data centers face at global scale.

Techniques currently used to feed data to models efficiently include decoupling data feeding from training, co-locating data and compute, and network optimizations. At the same time, Facebook's compute and data scale itself presents a unique opportunity: because of daily load cycles, a large number of CPUs sit idle during off-peak hours and can be used for distributed training algorithms.

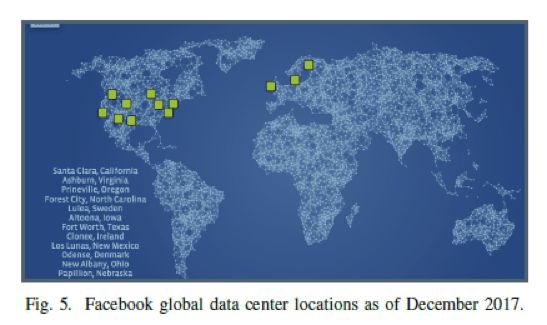

Facebook's compute fleet spans dozens of data centers, and this scale also provides disaster-recovery capability. Delivering new machine learning models on time is important to Facebook's business operations, so disaster recovery planning is also critical.

Looking ahead, Facebook expects rapid growth in the use of machine learning across its existing and new services. This growth will in turn pose increasingly serious scalability challenges for the teams that build the infrastructure behind these services. While optimizing infrastructure on existing platforms remains a significant opportunity for the company, we are also actively evaluating and exploring new hardware solutions while keeping our focus on algorithmic innovation.

The main takeaways of this article (Facebook's perspective on machine learning) are:

Machine learning is used extensively in almost all of Facebook's services, and computer vision accounts for only a small fraction of the resource requirements.

Facebook relies on an extremely diverse set of machine learning approaches, including but not limited to neural networks.

Our machine learning pipelines process enormous amounts of data, which creates engineering and efficiency challenges well beyond the compute nodes.

Facebook's inference currently relies mainly on CPUs, while training relies on both CPUs and GPUs. However, new hardware solutions should be continuously explored and evaluated from a performance-per-watt perspective.

The worldwide scale of Facebook's users and their daily activity patterns leave a huge number of machines available for machine learning tasks such as large-scale distributed training.

Facebook machine learning

Machine learning (ML) refers to using a series of inputs to build a tunable model, and then using that model to create a representation, prediction, or other form of useful signal.

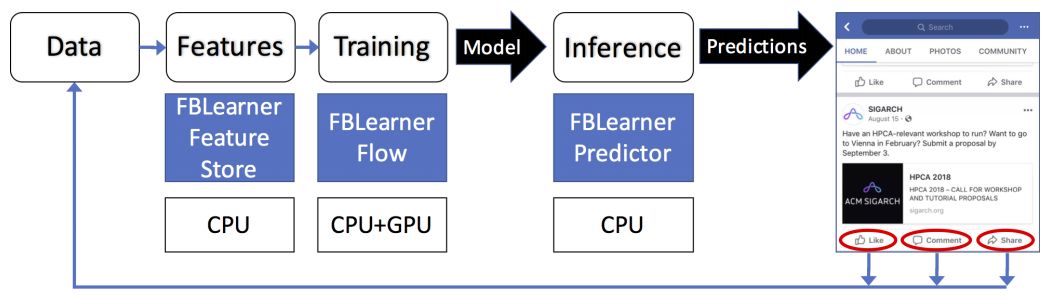

Figure 1. Example of Facebook's machine learning process and architecture

The flow shown in Figure 1 alternates between the following steps:

The training phase, in which the model is built. This phase is usually run offline.

The inference phase, in which the trained model runs in the application and makes (a set of) real-time predictions. This phase runs online.

Models are trained much less frequently than they are used for inference: the training time scale varies, but is generally on the order of days. Training also takes a relatively long time to complete, typically hours or days. Meanwhile, depending on the product, the online inference phase may run tens of trillions of times per day and generally needs to be performed in real time. In some cases, particularly for recommendation systems, additional training is also performed online in a continuous manner.

At Facebook, a notable feature of machine learning is the sheer volume of data available for model training. This scale has far-reaching implications for the entire machine learning infrastructure.

Main services using machine learning

News Feed

The News Feed ranking algorithm lets people see the stories that matter most to them each time they visit Facebook. A general model is trained to determine the various user and environmental factors that should influence how content is ranked. Later, when a person visits Facebook, the model selects a personalized set of the best posts, images, and other content from thousands of candidates, along with the best ordering of the selected content.
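To make the final selection step concrete, here is a minimal sketch of choosing and ordering the top candidates once a trained model has scored them. The `score` callable is a hypothetical stand-in for the trained News Feed model, and `rank_feed` and the toy scores are illustrative names, not Facebook's API.

```python
import heapq
from typing import Callable, List, Sequence


def rank_feed(candidates: Sequence[str], score: Callable[[str], float], k: int) -> List[str]:
    """Return the k highest-scoring candidates, best first."""
    # heapq.nlargest avoids fully sorting thousands of candidates when k is small.
    return heapq.nlargest(k, candidates, key=score)


# Hypothetical scores standing in for a trained model's output for one user.
toy_scores = {"photo_a": 0.91, "video_b": 0.42, "post_c": 0.77, "event_d": 0.15}
print(rank_feed(list(toy_scores), toy_scores.__getitem__, k=2))
```

A real ranker would compute the scores from user and context features at request time; the selection and ordering step itself stays this simple.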

Advertising

The ads system uses machine learning to determine which ads to show a particular user. An ads model is trained on user traits, user context, previous interactions, and ad attributes to learn to predict how likely a user is to click on an ad. Later, when the user visits Facebook, the inputs are passed through the trained model, which immediately determines which ads to display.

Search

Search launches a series of distinct sub-searches for the various vertical types (e.g., videos, photos, people, events). A classifier layer runs ahead of the vertical searches to predict which verticals to search, since searching every vertical would be inefficient. Both the classifier and the individual vertical searches have an offline training phase and an online phase that runs the models to perform classification and search.
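The classifier layer can be pictured as a router that launches only the verticals it predicts are relevant. This is a hedged sketch: `route_query`, the keyword-based `toy_classifier`, and the 0.5 threshold are hypothetical stand-ins for a trained classifier and real vertical search backends.

```python
from typing import Callable, Dict, List

VerticalSearch = Callable[[str], List[str]]


def route_query(query: str,
                predict: Callable[[str], Dict[str, float]],
                verticals: Dict[str, VerticalSearch],
                threshold: float = 0.5) -> Dict[str, List[str]]:
    """Launch only the vertical searches the classifier considers relevant."""
    probs = predict(query)
    chosen = [v for v, p in probs.items() if p >= threshold and v in verticals]
    return {v: verticals[v](query) for v in chosen}


def toy_classifier(query: str) -> Dict[str, float]:
    # Hypothetical keyword rules standing in for a trained classifier.
    q = query.lower()
    return {"videos": 0.9 if "video" in q else 0.1,
            "people": 0.8 if "friend" in q else 0.2,
            "events": 0.1}


# Hypothetical vertical backends; the default argument binds each name.
toy_verticals: Dict[str, VerticalSearch] = {
    name: (lambda q, name=name: [f"{name} result for {q!r}"])
    for name in ("videos", "people", "events")
}
print(route_query("cat video", toy_classifier, toy_verticals))
```

Skipping verticals below the threshold is what saves work; the threshold trades recall against the cost of launching extra sub-searches.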

Sigma

Sigma is a general framework for classification and anomaly detection for monitoring a variety of internal applications, including site integrity, spam detection, payment, registration, unauthorized employee access, and event recommendations. Sigma contains hundreds of different models that run every day in production, and each model is trained to detect anomalies or more generally classify content.

Lumos

Lumos extracts high-level attributes and embeddings from images and their content so that algorithms can understand them automatically. This data can be used as input to other products and services, for example as if it were text.

Facer

Facer is Facebook's face detection and recognition framework. Given an image, it first finds all the faces in it. It then runs a user-specific face recognition algorithm to determine whether each face belongs to one of the user's friends. Facebook uses this service to suggest which friends to tag in photos.

Language Translation

Language translation is the service that manages the internationalization of Facebook content. Facebook supports translation for more than 45 languages as source or target, which means more than 2,000 translation directions, such as English to Spanish or Arabic to English. Across these 2,000-plus directions, Facebook serves 4.5 billion translated post impressions every day. By translating users' posts, Facebook removes language barriers for 600 million people worldwide. Currently, each language direction has its own model, but multilingual models are also being considered [6].
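The 2,000-plus figure follows from counting ordered source/target pairs, assuming every supported language can be translated into every other one: n languages give n(n - 1) directions. `translation_directions` is an illustrative helper.

```python
def translation_directions(num_languages: int) -> int:
    """Ordered (source, target) pairs with source != target."""
    return num_languages * (num_languages - 1)


for n in (45, 46):
    print(n, "languages ->", translation_directions(n), "directions")
```

With 45 languages this gives 1,980 directions and with 46 it gives 2,070, consistent with "more than 45 languages" yielding "more than 2,000 directions". With one model per direction, the model count grows quadratically, which is one motivation for multilingual models.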

Speech Recognition

Speech recognition converts audio streams into text, which makes it possible to caption videos automatically. Currently, most streams are in English, but other languages will be supported in the future. In addition, non-speech audio events can be detected with a similar system (using a simpler model).

Beyond the major products mentioned above, many more long-tail services use various forms of machine learning; Facebook's products and services include hundreds of them.

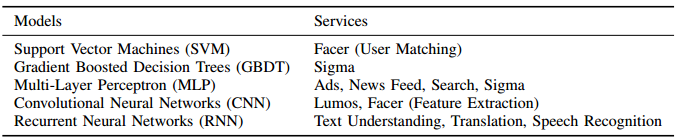

Machine learning model

All machine learning-based services use "features" (or inputs) to produce quantified outputs. The machine learning algorithms used at Facebook include Logistic Regression (LR), Support Vector Machines (SVM), Gradient Boosted Decision Trees (GBDT), and Deep Neural Networks (DNN).

LR and SVM are efficient both to train and to use for prediction. GBDT can improve accuracy at the cost of additional compute resources. DNNs are the most expressive and can provide the highest accuracy, but they use the most resources (in computational complexity, at least an order of magnitude more than linear models such as LR and SVM).

These three classes (linear models, GBDT, and DNN) correspond to models with progressively more free parameters, all of which must be trained by optimizing predictive accuracy on labeled input examples.

Among deep neural networks, three types are used frequently: multilayer perceptrons (MLP), convolutional neural networks (CNN), and recurrent neural networks (RNN/LSTM). MLP networks typically operate on structured input features (often for ranking); RNN/LSTM networks typically process temporal data, i.e., act as sequence processors (often for language processing); and CNNs are tools for processing spatial data (often for image processing). Table I shows the mapping between these machine learning model types and products/services.
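To illustrate "training by optimizing predictive accuracy on labeled examples", here is the simplest of the three classes, logistic regression, fitted with plain SGD on the log loss. This is a generic textbook sketch on hypothetical toy data, not Facebook's training code; GBDT and DNN training follow the same principle with far more free parameters.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(examples, lr=0.5, epochs=200, seed=0):
    """Fit weights w and bias b by SGD; examples are (features, label in {0, 1})."""
    rng = random.Random(seed)
    w = [0.0] * len(examples[0][0])
    b = 0.0
    data = list(examples)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


# Hypothetical labeled data: label is 1 when the first feature exceeds the second.
toy = [([1.0, 0.0], 1), ([0.0, 1.0], 0), ([2.0, 1.0], 1), ([1.0, 2.0], 0)]
w, b = train_logistic_regression(toy)
print(round(predict(w, b, [3.0, 0.0]), 3), round(predict(w, b, [0.0, 3.0]), 3))
```

Each update costs one dot product per example, which is why the text calls LR cheap to train and to serve.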

Table I Products or services that utilize machine learning algorithms

ML-as-a-Service in Facebook

To simplify applying machine learning in our products, we built several internal platforms and toolkits, including FBLearner, Caffe2, and PyTorch. FBLearner is a suite of three tools (FBLearner Feature Store, FBLearner Flow, and FBLearner Predictor), each responsible for a different part of the machine learning pipeline. As shown in Figure 1, it uses an internal job scheduler to allocate resources and schedule jobs on a shared pool of GPUs and CPUs. Most machine learning model training at Facebook is done on the FBLearner platform. These tools and platforms are designed to make machine learning engineers more productive and let them focus on algorithmic innovation.

FBLearner Feature Store. The starting point for any machine learning modeling task is collecting and generating features. FBLearner Feature Store is essentially a catalog of feature generators that can be used both for training and for real-time prediction, and it also serves as a marketplace where multiple teams can share and discover features. It is a good platform for teams just starting to use machine learning, and it also helps apply new features to existing models.

FBLearner Flow is the machine learning platform Facebook uses to train models. Flow is a pipeline management system that executes a workflow describing the steps needed to train and/or evaluate a model and the resources those steps require. A workflow consists of discrete units, or operators, each with inputs and outputs. The connections between operators are inferred automatically by tracking the flow of data from one operator to the next, and Flow executes the workflow by handling scheduling and resource management. Flow also includes an experiment-management tool with a simple user interface that tracks all the artifacts and metrics produced by each workflow or experiment, making experiments easy to compare and manage.

FBLearner Predictor is Facebook's internal inference engine, which uses models trained in Flow to provide real-time predictions. Predictor can be used as a multi-tenant service or as a library integrated into a specific product's backend services. Many Facebook product teams use Predictor, and many of them require low-latency solutions. The direct integration between Flow and Predictor also helps with running online experiments and managing multiple versions of a model in production.
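The idea of inferring operator connections from data flow can be sketched in a few lines. This is a hypothetical toy, not FBLearner Flow's API: each operator is declared as (input names, output name, function), and dependencies are derived by matching each input to the operator that produces it, then executed in topological order with Python's standard `graphlib`. It assumes every output name is produced by exactly one operator.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical operator spec: (input names, output name, function).
Operator = Tuple[List[str], str, Callable[..., Any]]


def run_workflow(operators: Dict[str, Operator], initial: Dict[str, Any]) -> Dict[str, Any]:
    # Infer edges by tracking data flow: an operator depends on whoever produces its inputs.
    producer = {out: name for name, (_, out, _) in operators.items()}
    deps = {name: {producer[i] for i in ins if i in producer}
            for name, (ins, _, _) in operators.items()}
    values = dict(initial)
    for name in TopologicalSorter(deps).static_order():
        ins, out, fn = operators[name]
        values[out] = fn(*(values[i] for i in ins))
    return values


# Declared out of order on purpose; the execution order is inferred, not written down.
workflow: Dict[str, Operator] = {
    "evaluate": (["model", "rows"], "mae",
                 lambda m, rows: sum(abs(r - m) for r in rows) / len(rows)),
    "train": (["rows"], "model", lambda rows: sum(rows) / len(rows)),  # toy "model": the mean
    "clean": (["raw"], "rows", lambda raw: [r for r in raw if r is not None]),
}
print(run_workflow(workflow, {"raw": [1.0, None, 3.0]}))
```

A production system would also attach resource requests to each operator and dispatch them to a scheduler rather than calling them inline.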

Deep learning framework

We use two distinct but complementary deep learning frameworks at Facebook: PyTorch, optimized for research, and Caffe2, optimized for production.

Caffe2 is Facebook's in-house production framework for training and deploying large-scale machine learning models. Caffe2 focuses on several key features that products require: performance, cross-platform support, and coverage of core machine learning algorithms such as convolutional neural networks (CNN), recurrent neural networks (RNN), and multilayer perceptrons (MLP). These networks may have sparse or dense connections and up to tens of billions of parameters. The framework has a modular design that shares a unified graph representation across all backend implementations (CPU, GPU, and accelerators). To achieve optimal runtimes on different platforms, Caffe2 also abstracts third-party libraries including cuDNN, MKL, and Metal.

PyTorch is Facebook's preferred framework for AI research. Its frontend emphasizes flexibility, debugging, and dynamic neural networks to enable rapid experimentation. Because it relies on Python for execution, it is not optimized for production and mobile deployment. When a research project produces valuable results, the model needs to be transferred to production. In the past, we did this by rewriting the training pipeline for the production environment in another framework. Recently, Facebook began building the ONNX toolchain to simplify this transfer. For example, dynamic neural networks are used in cutting-edge AI research, but such models take longer to bring into products. By decoupling the frameworks, we avoid having to design more complex execution engines (such as Caffe2) just to meet performance requirements. In addition, when doing research, researchers care more about flexibility than about model speed. For example, during model exploration a 30% performance degradation can be tolerable, especially when it comes with ease of testing and model visualization. The same approach is not suitable for production. This trade-off is also reflected in the design of PyTorch and Caffe2: PyTorch provides good defaults and reasonable performance, while Caffe2 can optionally use asynchronous graph execution, quantized weights, and multiple specialized backends to achieve the best performance.

Although the FBLearner platform itself does not restrict which framework is used, whether Caffe2, TensorFlow, PyTorch, or others, our AI Software Platform team has specifically optimized FBLearner to integrate well with Caffe2. Overall, separating the research and production frameworks (PyTorch and Caffe2, respectively) lets us move flexibly on both sides, with fewer constraints when adding new features.

ONNX. The deep learning tools ecosystem is still in its infancy across the industry. Different tools excel at different subsets of problems and make different trade-offs in flexibility, performance, and supported platforms, much like the trade-offs we described for PyTorch and Caffe2. As a result, there is a strong need to exchange trained models between frameworks and platforms. To fill this gap, in 2017 Facebook and several partners jointly launched the Open Neural Network Exchange (ONNX). ONNX is a format for representing deep learning models in a standard way, enabling interoperability between different frameworks and vendor-optimized libraries and thereby meeting the need to exchange trained models between frameworks or platforms. ONNX is designed as an open specification, allowing framework authors and hardware vendors to contribute to it and to provide converters between frameworks and libraries. Facebook is working to make ONNX a collaborative partner of all these tools rather than an exclusive official standard.


Within Facebook, ONNX is the primary means by which we move research models from the PyTorch environment to high-performance production deployment in Caffe2. ONNX can automatically capture and convert the static parts of a model. An additional toolchain helps transfer the dynamic graph parts of models from Python, either by mapping them to control-flow primitives in Caffe2 or by reimplementing them in C++ as custom operators.
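Why only the static parts can be captured automatically is easiest to see with trace-based capture, which records the operations executed for one example input. The toy tracer below is a conceptual sketch written for this article (`Traced`, `trace`, and `replay` are hypothetical names, not ONNX or PyTorch APIs): a data-dependent `if` is evaluated during tracing and silently frozen into a single branch.

```python
from typing import Callable, List, Tuple

Tape = List[Tuple[str, float]]


class Traced:
    """Toy tracer: records the arithmetic ops actually executed for one input."""

    def __init__(self, value: float, tape: Tape):
        self.value = value
        self.tape = tape

    def __mul__(self, k: float) -> "Traced":
        self.tape.append(("mul", k))
        return Traced(self.value * k, self.tape)

    def __add__(self, k: float) -> "Traced":
        self.tape.append(("add", k))
        return Traced(self.value + k, self.tape)

    def __gt__(self, k: float) -> bool:
        # The branch condition is evaluated eagerly; the tape never records it.
        return self.value > k


def model(x):
    # Data-dependent control flow: the "dynamic" part of the model.
    if x > 0:
        return x * 2.0
    return x + 10.0


def trace(fn: Callable, example: float) -> Tape:
    tape: Tape = []
    fn(Traced(example, tape))
    return tape


def replay(tape: Tape, x: float) -> float:
    for op, k in tape:
        x = x * k if op == "mul" else x + k
    return x


tape = trace(model, example=3.0)  # captures only the x > 0 branch
print(tape, model(-1.0), replay(tape, -1.0))
```

Replaying the tape for a negative input still multiplies by 2 and returns -2.0, whereas running the model directly returns 9.0. Control flow like this is what has to be mapped to Caffe2 control-flow primitives or reimplemented as a custom operator.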

Resource requirements for machine learning

Because the training and inference phases differ in resource requirements, frequency, and duration, we discuss the details and resource usage of these two phases separately.

Facebook hardware resources overview

Facebook's infrastructure team has long built efficient platforms for major software services, including custom server, storage, and network support designed around the resource requirements of each major workload.

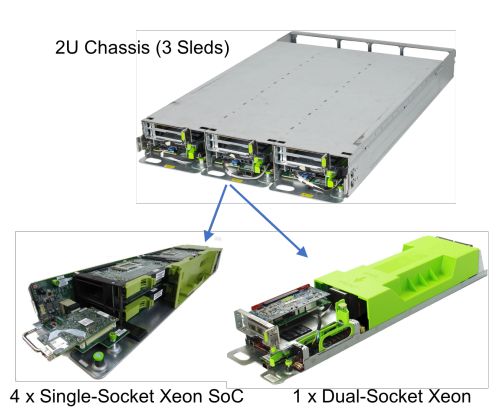

Figure 2. CPU-based compute servers. The single-socket server sled holds four Monolake server cards, so a 2U chassis of three such sleds holds 12 servers. The dual-socket server sled holds one dual-socket server, so a 2U chassis holds three dual-socket servers.

Currently, Facebook supports about eight major compute and storage rack types, corresponding to eight major services. These rack types are sufficient to meet the resource requirements of Facebook's major services. For example, Figure 2 shows a 2U chassis that holds three compute sleds supporting two server types. One sled type is a single-socket CPU server (1xCPU), used mostly for the web tier: a stateless, throughput-oriented service, so it can use a more power-efficient CPU (Broadwell-D). It also has relatively little DRAM (32GB) and minimal on-board disk or flash.

The other sled type is a larger dual-socket CPU server (two high-power Broadwell-EP or Skylake SP CPUs) with a large amount of DRAM, typically used for services that involve heavy computation and storage.

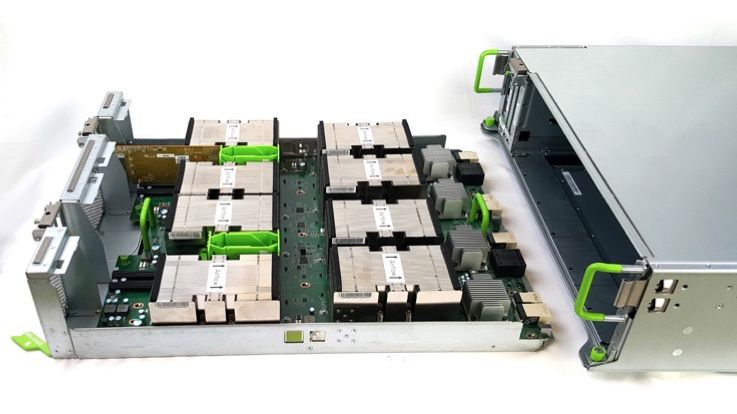

Figure 3. Big Basin GPU server with 8 GPUs (3U chassis)

As the neural networks we train grow larger and deeper, we developed the Big Basin GPU server (Figure 3), our latest GPU server as of 2017. The original Big Basin was equipped with eight NVIDIA Tesla P100 GPU accelerators interconnected with NVIDIA NVLink in an eight-GPU hybrid cube mesh; the design was later refreshed with V100 GPUs.

Big Basin is the successor to the earlier Big Sur GPU server, the first widely deployed high-performance AI compute platform in Facebook's data centers, designed in 2015 to support NVIDIA M40 GPUs and released through the Open Compute Project.

Compared with Big Sur, the V100 Big Basin platform delivers much better performance per watt, thanks to single-precision floating-point throughput rising from 7 teraflops to 15.7 teraflops per GPU and to high-bandwidth memory (HBM2) providing 900 GB/s of bandwidth. The new architecture also doubles the speed of half-precision arithmetic, further improving throughput.

Because Big Basin offers greater compute throughput and its GPU memory grew from 12 GB to 16 GB, it can train models 30% larger than before. High-bandwidth NVLink communication between GPUs also improves distributed training. In tests with the ResNet-50 image classification model, Big Basin achieved 300% higher throughput than Big Sur, allowing us to train more complex models faster than before.

Facebook has released the designs of all of these compute servers, as well as several storage platforms, through the Open Compute Project.

Resource requirements for offline training

Currently, different products use different compute resources for their offline training steps. Some products (such as Lumos) do all their training on GPUs. Others, such as Sigma, do all their training on dual-socket CPU compute servers. Products such as Facer use a two-stage process: a general face detection and recognition model is trained on GPUs at low frequency (monthly), and user-specific models are then trained at very high frequency on thousands of 1xCPU servers.

In this section, we focus on the training platforms, training frequency, and training duration of the various services, summarized in Table II. We also discuss trends in dataset size and their implications for compute, memory, storage, and network architecture.

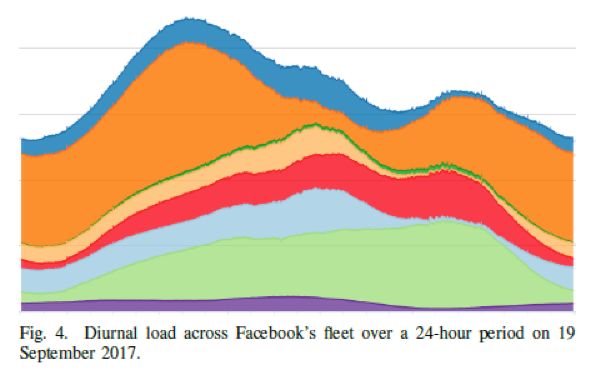

Compute type and location relative to data sources. Offline training can run on CPUs, on GPUs, or on both, depending on the service. Although in most cases models trained on GPUs outperform those trained on CPUs, the enormous off-the-shelf CPU capacity makes CPUs a very useful platform. This is especially true during the off-peak hours of the day, when that CPU capacity would otherwise sit idle, as illustrated in Figure 4. The services map to training resources as follows:

Services that train models on GPUs: Lumos, speech recognition, language translation

Services that train models on CPUs: News Feed, Sigma

Services that train models on both GPUs and CPUs: Facer (a general model trained on GPUs every few years, since such models are relatively stable, plus user-specific models trained on 1xCPU servers to handle new image data) and Search (which uses multiple independent vertical search engines, with a predictive classifier launching the most appropriate ones).
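The off-peak opportunity described above can be quantified from a daily load profile. This sketch assumes a hypothetical sinusoidal utilization curve and a 10% safety headroom; `idle_core_hours` is an illustrative helper, not a Facebook tool, and the numbers are not Facebook data.

```python
import math


def idle_core_hours(total_cores: int, hourly_utilization: list, headroom: float = 0.1) -> float:
    """Core-hours per day not needed for serving, after reserving a safety headroom.

    hourly_utilization: fraction of cores busy with online traffic in each hour (0..1).
    """
    spare = 0.0
    for u in hourly_utilization:
        # Capacity that could be lent to training this hour without eating into headroom.
        spare += max(0.0, 1.0 - headroom - u) * total_cores
    return spare


# Hypothetical daily profile: peaks at 85% busy around 20:00, bottoms out at 25%.
profile = [0.55 + 0.30 * math.cos(2 * math.pi * (h - 20) / 24) for h in range(24)]
print(f"{idle_core_hours(10_000, profile):,.0f} spare core-hours per day")
```

Most of the spare capacity appears in the trough hours, which is why training jobs that tolerate being scheduled off-peak can use CPUs that would otherwise sit idle.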

Currently, GPUs are used mainly for offline training rather than for serving real-time data to users, because most GPU architectures are optimized for throughput rather than latency. At the same time, because training relies heavily on data drawn from large production data stores, GPUs must be located close to the data sources for performance and bandwidth reasons. As the amount of training data grows rapidly, keeping GPUs close to the data sources is becoming increasingly important.

Memory, storage, and network: In terms of memory capacity, both CPU and GPU platforms provide sufficient memory for training. Even for an application like Facer, user-specific SVM models can be trained on a 1xCPU server with 32GB of RAM. Overall training efficiency is very good when the platform and its spare storage capacity are used as efficiently as possible.

| Service | Resource | Training frequency | Training duration |
| --- | --- | --- | --- |
| News Feed | Single-socket CPUs | Once a day | Hours |
| Facer | GPUs + single-socket CPUs | Every N photos | Seconds |
| Lumos | GPUs | Once every few months | Hours |
| Search | Vertical-dependent | Once per hour | Hours |
| Language Translation | GPUs | Once a week | Days |
| Sigma | Dual-socket CPUs | Several times a day | Hours |
| Speech Recognition | GPUs | Once a week | Hours |

Table II Frequency, duration and resources of offline training for different services

Machine learning systems depend on training with example data. Facebook's machine learning data pipelines consume a great deal of data, which pushes compute resources to be located close to the databases.

Over time, most services tend to leverage more accumulated user data, which makes them more dependent on other Facebook services and requires more network bandwidth to fetch data. Therefore, large storage capacity is needed at or near the data sources so that data can be transferred at scale from remote regions without stalling the training pipeline while it waits for more example data.

The same consideration applies when deciding where to place training machines, so that the training fleet is not put under excessive pressure.

The amount of data used during offline training varies greatly across services, but the training datasets of almost all services show sustained, sometimes dramatic, growth. For example, some services use millions of rows of data before returns diminish, while others use tens of billions of rows (more than 100 TB) and are limited only by resources.

Scaling considerations and distributed training: Training a neural network involves optimizing its parameter weights with stochastic gradient descent (SGD). SGD fits the network by iteratively updating the weights based on evaluations of small subsets of labeled examples (a "batch" or "mini-batch"). In data parallelism, the network is replicated into multiple model copies (parallel instances) that process multiple batches of data in parallel.
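Synchronous data parallelism can be illustrated on a one-parameter model: each replica holds the same weight, computes a gradient on its own equal slice of the mini-batch, and the averaged gradient (the all-reduce) is applied everywhere. This is a toy sketch with hypothetical data and names, not Facebook's implementation; with equal shards, the averaged gradient equals the full mini-batch gradient.

```python
from typing import List, Tuple

Example = Tuple[float, float]  # (x, y)


def grad_mse(w: float, batch: List[Example]) -> float:
    """Gradient of mean squared error for the 1-D model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_step(w: float, minibatch: List[Example], replicas: int, lr: float) -> float:
    if len(minibatch) % replicas:
        raise ValueError("mini-batch must split evenly across replicas")
    shard = len(minibatch) // replicas
    # Every replica holds the same w and computes a gradient on its own shard.
    grads = [grad_mse(w, minibatch[r * shard:(r + 1) * shard]) for r in range(replicas)]
    # Synchronous update: average the gradients (the all-reduce), then all replicas apply it.
    return w - lr * sum(grads) / replicas


data = [(x, 3.0 * x) for x in [0.5, 1.0, 1.5, 2.0, -1.0, -0.5, 0.25, 1.25]]
w = 0.0
for _ in range(100):
    w = data_parallel_step(w, data, replicas=4, lr=0.1)
print(round(w, 4))  # converges toward the true slope, 3.0
```

In a real system the replicas run on separate GPUs or machines, so the averaging step becomes network traffic, which is where the bandwidth questions below come from.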

When training on a single machine, larger or deeper models can give better results and higher accuracy, but training them usually requires processing more samples. On a single machine, training throughput can be maximized by increasing the number of model replicas and applying data parallelism across multiple GPUs.

As the amount of training data grows over time, hardware limits can lengthen overall training latency and time to convergence. Distributed training can overcome these hardware limits and reduce latency. This is an active research area at Facebook and across the AI research community.

A common assumption is that data parallelism across machines requires a specialized interconnect. However, in our work on distributed training, we found that Ethernet-based networking can provide approximately linear scaling. The ability to scale approximately linearly is closely tied to model size and network bandwidth.

If network bandwidth is too low, parameter synchronization takes longer than gradient computation, and most of the benefit of data parallelism across machines is lost. With 50G Ethernet NICs, we were able to scale training of vision models across Big Basin servers without inter-machine synchronization causing any problems.

In all cases, updates must be shared with the other replicas using techniques that trade off synchronization (every replica sees the same state), consistency (every replica generates correct updates), and performance (which scales sublinearly), and these trade-offs may affect training quality. For example, the translation service currently cannot train with large mini-batches without degrading model quality.

Conversely, with certain hyperparameter settings, image classification models can be trained with very large mini-batches and scaled to more than 256 GPUs.
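The bandwidth argument can be made concrete with a back-of-the-envelope estimate. This sketch assumes a ring all-reduce for gradient synchronization (a common scheme; the text does not say which algorithm Facebook uses) and counts only bytes on the wire, ignoring latency and overlap with computation. The 0.25 s compute time per step is a hypothetical placeholder.

```python
def ring_allreduce_seconds(gradient_bytes: float, workers: int, link_gbps: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce (ignores latency and overlap).

    In a ring all-reduce each worker sends and receives 2 * (N - 1) / N of the
    gradient bytes, split across a reduce-scatter and an all-gather phase.
    """
    bytes_per_worker = 2 * (workers - 1) / workers * gradient_bytes
    return bytes_per_worker * 8 / (link_gbps * 1e9)


# Assumed example: ResNet-50 has about 25.6M parameters, i.e. ~102.4 MB of FP32 gradients.
grad_bytes = 25.6e6 * 4
sync = ring_allreduce_seconds(grad_bytes, workers=8, link_gbps=50)
compute = 0.25  # hypothetical seconds of forward/backward compute per step
print(f"sync {sync * 1e3:.1f} ms vs compute {compute * 1e3:.0f} ms per step")
```

Under these assumptions synchronization takes roughly 29 ms per step on 50 Gb/s links, well below the assumed compute time, so near-linear scaling is plausible; at 10 Gb/s the same gradients would take about 143 ms, and the margin shrinks quickly.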
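The text does not spell out which hyperparameter settings it means. One published recipe, from Facebook's own large-minibatch ImageNet work (Goyal et al., 2017, "Accurate, Large Minibatch SGD"), is the linear scaling rule plus gradual warmup: the learning rate is scaled in proportion to the mini-batch size and ramped up linearly at the start of training. The sketch assumes that recipe with its base rate of 0.1 per 256 images; the step counts are hypothetical and the later decay schedule is omitted.

```python
def large_batch_lr(step: int, batch_size: int, warmup_steps: int,
                   base_lr: float = 0.1, base_batch: int = 256) -> float:
    """Linear scaling rule with gradual warmup (decay schedule omitted)."""
    target = base_lr * batch_size / base_batch  # scale LR in proportion to batch size
    if step < warmup_steps:
        # Ramp linearly from base_lr to the scaled target to avoid early divergence.
        return base_lr + (target - base_lr) * step / warmup_steps
    return target


for step in (0, 2500, 5000, 10000):
    print(step, round(large_batch_lr(step, batch_size=8192, warmup_steps=5000), 3))
```

With a batch of 8,192 the target rate is 32 times the base rate (3.2); the warmup avoids applying that large rate while the weights are still far from a good region.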

Experiments on one of Facebook's larger services showed that running data parallelism on 5x as many machines makes training 4x faster. For example, consider a set of models that each took 4 days to train: the machine fleet could previously train 100 different models in total over those 4 days, and it now trains 20 models per day. Training throughput drops by 20%, but the potential wait time for engineering iteration falls from 4 days to 1 day.
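The figures in this example are consistent once fleet throughput and per-model latency are separated. A quick check, assuming each baseline model occupies one unit of machines for 4 days and the fleet holds 100 such units (`FleetPlan` and `plan` are illustrative names):

```python
from dataclasses import dataclass


@dataclass
class FleetPlan:
    models_per_day: float  # fleet-wide training throughput
    days_per_model: float  # wait time before one model finishes


def plan(fleet_size: float, machines_per_model: float, days_per_model: float) -> FleetPlan:
    concurrent_models = fleet_size / machines_per_model
    return FleetPlan(concurrent_models / days_per_model, days_per_model)


# Units are "baseline jobs": the fleet fits 100 single-unit jobs at once.
before = plan(fleet_size=100, machines_per_model=1, days_per_model=4)
# 5x the machines per model buys a 4x speedup (80% scaling efficiency).
after = plan(fleet_size=100, machines_per_model=5, days_per_model=4 / 4)
change = after.models_per_day / before.models_per_day - 1
print(before, after, f"throughput change {change:+.0%}")
```

Throughput falls from 25 to 20 models per day (the 20% loss reflects 4x speedup on 5x machines), while latency drops from 4 days to 1 day.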

If a model becomes extremely large, model parallelism can be used: the model's layers are grouped and distributed across machines to optimize training efficiency, with activations passed between machines. The optimization may be constrained by network bandwidth, latency, or the need to balance per-machine limits. This increases the model's end-to-end latency, so gains in raw performance per time step usually come with lower quality per step, which may further reduce model accuracy at each step. These per-step accuracy losses accumulate, so there is an optimal number of steps to process in parallel.

DNN models themselves are designed to run on a single machine, because during inference, partitioning the model graph across machines usually causes a large amount of machine-to-machine communication. Still, Facebook's major services continually weigh the costs and benefits of scaling models out, and these considerations may change network capacity requirements.
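The text does not say how layers are grouped. One standard approach, sketched here as an assumption, is to split the layers (kept in order) into contiguous stages so that the slowest stage is as fast as possible, using dynamic programming over hypothetical per-layer costs. `partition_layers` is an illustrative name, and the sketch ignores the activation-transfer costs the text warns about.

```python
from functools import lru_cache
from typing import List, Tuple


def partition_layers(costs: List[float], stages: int) -> Tuple[float, List[List[int]]]:
    """Split ordered layers into contiguous groups, minimizing the slowest group's cost."""
    n = len(costs)
    if not 1 <= stages <= n:
        raise ValueError("need between 1 and len(costs) stages")
    prefix = [0.0]
    for c in costs:
        prefix.append(prefix[-1] + c)

    @lru_cache(maxsize=None)
    def best(i: int, k: int) -> Tuple[float, Tuple[int, ...]]:
        # Optimal split of layers i..n-1 into k groups: (bottleneck cost, cut indices).
        if k == 1:
            return prefix[n] - prefix[i], ()
        result: Tuple[float, Tuple[int, ...]] = (float("inf"), ())
        for j in range(i + 1, n - k + 2):  # first group is layers i..j-1
            rest_cost, rest_cuts = best(j, k - 1)
            bottleneck = max(prefix[j] - prefix[i], rest_cost)
            if bottleneck < result[0]:
                result = (bottleneck, (j,) + rest_cuts)
        return result

    bottleneck, cuts = best(0, stages)
    bounds = [0, *cuts, n]
    groups = [list(range(bounds[s], bounds[s + 1])) for s in range(stages)]
    return bottleneck, groups


# Hypothetical per-layer compute costs (ms) split across 3 machines.
print(partition_layers([4, 1, 1, 2, 3, 3], stages=3))
```

Balancing stages matters because the slowest machine sets the pace for every step; a fuller model would add the cost of sending activations across each cut.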

| Service | Relative compute capacity | Compute | Memory |
| --- | --- | --- | --- |
| News Feed | 100X | Dual-socket CPU | High |
| Facer | 10X | Single-socket CPU | Low |
| Lumos | 10X | Single-socket CPU | Low |
| Search | 10X | Dual-socket CPU | High |
| Language Translation | 1X | Dual-socket CPU | High |
| Sigma | 1X | Dual-socket CPU | High |
| Speech Recognition | 1X | Dual-socket CPU | High |

Table III Resource requirements for online reasoning services.

Resource requirements for online inference

In the online inference step, which follows offline training, the model is loaded onto machines and run on real-time inputs to produce results for live website traffic.

Next, we discuss an online inference model in practice: the ads ranking model. This model filters thousands of ads down to the one to five that are displayed in News Feed. It does so through progressively more complex ranking passes over successively smaller subsets of ads.
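The funnel structure can be sketched generically. Each pass scores only the survivors of the previous pass with a more expensive model and keeps a shrinking top-k. The scoring functions, feature names (`bid`, `ctr`), and stage sizes below are hypothetical stand-ins, not Facebook's ranking models.

```python
def cascade_rank(candidates, stages):
    """Run progressively more expensive scorers over shrinking candidate sets.

    `stages` is a list of (score_fn, keep) pairs, cheapest first. Each stage scores
    only the survivors of the previous stage and keeps its top `keep`.
    """
    survivors = list(candidates)
    for score, keep in stages:
        survivors = sorted(survivors, key=score, reverse=True)[:keep]
    return survivors


# 1000 hypothetical ads with synthetic features.
ads = [{"id": i, "bid": (i * 37) % 100, "ctr": ((i * 53) % 100) / 1000} for i in range(1000)]
stages = [
    (lambda ad: ad["bid"], 100),                                           # cheap pass: 1000 -> 100
    (lambda ad: ad["bid"] * ad["ctr"], 20),                                # finer pass: 100 -> 20
    (lambda ad: ad["bid"] * ad["ctr"] * (1 + 0.1 * (ad["id"] % 3)), 5),    # heaviest pass: 20 -> 5
]
top = cascade_rank(ads, stages)
print([ad["id"] for ad in top])
```

Because the expensive scorers see only a small fraction of the candidates, the cost of the whole cascade is dominated by the cheap first pass.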

Each pass uses a model similar to a multilayer perceptron (MLP) that includes a sparse embedding layer, and each pass narrows down the number of candidate ads. The sparse embedding layer is memory-intensive. For the later passes, where the models have more parameters, it therefore runs on servers separate from the MLP passes.
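To make the structure concrete, here is a pure-Python sketch of one such pass. A sparse embedding "bag" gathers rows by ID and sum-pools them, and the result feeds a small MLP. The sizes, sum pooling, and feature layout are assumptions for illustration. Even in this toy, the embedding table (4,000 values) dwarfs the MLP weights (56). This hints at why the sparse part can be worth serving from separate machines.

```python
import random


def embedding_bag(table, ids):
    """Sparse embedding lookup: gather the rows for the given IDs and sum-pool them."""
    pooled = [0.0] * len(table[0])
    for i in ids:
        for d, v in enumerate(table[i]):
            pooled[d] += v
    return pooled


def dense(x, weights, bias, relu=True):
    """Fully connected layer y = Wx + b, optionally followed by ReLU."""
    y = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]
    return [max(0.0, v) for v in y] if relu else y


def score_ad(table, sparse_ids, dense_feats, layers):
    """Concatenate the pooled sparse features with dense features, then run the MLP."""
    h = embedding_bag(table, sparse_ids) + list(dense_feats)
    for idx, (w, b) in enumerate(layers):
        h = dense(h, w, b, relu=idx < len(layers) - 1)  # no ReLU on the output layer
    return h[0]


rng = random.Random(0)
table = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(1000)]  # 1000 IDs x 4 dims
layers = [
    ([[rng.uniform(-1, 1) for _ in range(6)] for _ in range(8)], [0.0] * 8),  # 6 -> 8
    ([[rng.uniform(-1, 1) for _ in range(8)]], [0.0]),                       # 8 -> 1
]
print(score_ad(table, [3, 17, 512], [0.5, 1.0], layers))
```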

From a compute perspective, the vast majority of online inference runs on large fleets of single-socket (1xCPU) and dual-socket (2xCPU) servers. 1xCPU servers are more efficient and more cost-effective for Facebook's services. Facebook therefore pushes to move inference from 2xCPU to 1xCPU servers wherever possible.

With the advent of high-performance mobile hardware, Facebook can even run certain models directly on users' mobile devices to reduce latency and communication costs. However, some services that need substantial compute and memory still require 2xCPU servers for the best performance.

Different products have different latency requirements for online inference results. In some cases, the results are only "nice to have". In others, a quick preliminary estimate is returned to the user first, and the data is fed back into a model afterward. For example, it may be acceptable to classify a piece of content as acceptable at first. A more complex model then runs later and may overturn that preliminary classification.

Services such as ads ranking and News Feed have well-defined SLAs for delivering the right content to users. These SLAs constrain model complexity and dependencies, so with more compute, more advanced models could be deployed.

Machine learning at data center scale

Beyond compute requirements, several important considerations arise when deploying machine learning in the data center, including its significant data requirements and reliability in the face of natural disasters.

From data to models

For many of Facebook's machine learning models, the main factor in their success is the availability of extensive, high-quality data. The ability to quickly process this data and feed it to the models is what makes fast, efficient offline training possible.

For complex ML applications such as ads and feed ranking, each training task requires hundreds of terabytes of data or more. Complex preprocessing logic is also used to clean and normalize the data for efficient transfer and easier learning. These operations are very resource-intensive, especially in storage, network, and CPU.

As a general solution, we try to decouple data processing from the training workload, because the two have very different characteristics. Data processing is complex, ad hoc, business-dependent, and fast-changing. Training workloads, on the other hand, are usually regular (e.g., GEMM), stable (there are relatively few core operations), and highly optimized. They also strongly prefer a "clean" environment (e.g., exclusive cache usage and minimal thread contention).

To optimize for both, we physically isolate the two workloads on different machines. Data processing machines, called "readers", read data from storage, process and compress it, and then feed the results to training machines called "trainers". The trainers, in turn, focus solely on executing training quickly and efficiently. Readers and trainers can be distributed independently, which provides flexibility and scalability. We also optimize the machine configuration for each workload.
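A minimal sketch of the reader/trainer split follows. It assumes an in-process bounded queue stands in for the network link between the two machine types. Readers do the messy preprocessing and compression, while the trainer only decompresses and runs a placeholder step. The function names and the clipping "preprocessing" are illustrative, not Facebook's pipeline. The sentinel counting also assumes a single trainer.

```python
import json
import queue
import threading
import zlib


def reader(shards, out_q):
    """'Reader' role: clean each raw batch, compress it, and hand it to a trainer."""
    for raw in shards:
        cleaned = [min(max(x, 0.0), 1.0) for x in raw]  # stand-in for real preprocessing
        out_q.put(zlib.compress(json.dumps(cleaned).encode()))
    out_q.put(None)  # sentinel: this reader has no more batches


def trainer(in_q, num_readers, results):
    """'Trainer' role: decompress ready-made batches and run a (placeholder) step."""
    finished = 0
    while finished < num_readers:
        blob = in_q.get()
        if blob is None:
            finished += 1
            continue
        batch = json.loads(zlib.decompress(blob))
        results.append(sum(batch) / len(batch))  # placeholder for a real training step


q = queue.Queue(maxsize=8)  # bounded: readers block instead of racing far ahead
shards_per_reader = [
    [[0.2, 1.5, -0.3], [0.4, 0.6, 0.8]],
    [[0.9, 0.1, 0.5]],
]
results = []
readers = [threading.Thread(target=reader, args=(s, q)) for s in shards_per_reader]
train_thread = threading.Thread(target=trainer, args=(q, len(readers), results))
for t in readers + [train_thread]:
    t.start()
for t in readers + [train_thread]:
    t.join()
print(len(results))  # 3 batches trained
```

Keeping the trainer loop this narrow is the point of the split: any change to the cleaning logic touches only `reader`, and readers can be scaled out separately if the trainer starves.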

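Another important optimization target is network usage. The data traffic generated by training is substantial and sometimes bursty. Without intelligent handling, it can easily saturate network devices and even interfere with other services. To address this, we use compression, scheduling algorithms, data/compute placement, and similar techniques.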

利用规模

作为一家为用户æä¾›æœåŠ¡çš„å…¨çƒæ€§å…¬å¸ï¼ŒFacebookå¿…é¡»ä¿æŒå¤§é‡æœåŠ¡å™¨çš„设计能够满足在任何时间段内的峰值工作负载。如图所示,由于用户活动的å˜åŒ–å–决于日常负è·ä»¥åŠç‰¹æ®Šäº‹ä»¶ï¼ˆä¾‹å¦‚地区节å‡æ—¥ï¼‰æœŸé—´çš„å³°å€¼ï¼Œå› æ¤å¤§é‡çš„æœåŠ¡å™¨åœ¨ç‰¹å®šçš„时间段内通常是闲置的。

这就释放了éžé«˜å³°æ—¶æ®µå†…大é‡å¯ç”¨çš„计算资æºã€‚利用这些å¯èƒ½çš„异构资æºï¼Œä»¥å¼¹æ€§æ–¹å¼åˆç†åˆ†é…ç»™å„ç§ä»»åŠ¡ã€‚这是Facebookç›®å‰æ£åŠªåŠ›æŽ¢ç´¢çš„一大机会。对于机器å¦ä¹ 应用程åºï¼Œè¿™æ供了将å¯æ‰©å±•çš„分布å¼è®ç»ƒæœºåˆ¶çš„优势应用到大é‡çš„异构资æºï¼ˆä¾‹å¦‚具有ä¸åŒRAM分é…çš„CPUå’ŒGPUå¹³å°ï¼‰çš„机会。但是,这也会带æ¥ä¸€äº›æŒ‘战。在这些低利用率的时期,大é‡å¯ç”¨çš„计算资æºå°†ä»Žæ ¹æœ¬ä¸Šå¯¼è‡´åˆ†å¸ƒå¼è®ç»ƒæ–¹æ³•çš„ä¸åŒã€‚

调度程åºé¦–先必须æ£ç¡®åœ°å¹³è¡¡è·¨è¶Šå¼‚æž„ç¡¬ä»¶çš„è´Ÿè½½ï¼Œè¿™æ ·ä¸»æœºå°±ä¸å¿…为了åŒæ¥æ€§è€Œç‰å¾…其他进程的执行。当è®ç»ƒè·¨è¶Šå¤šä¸ªä¸»æœºæ—¶ï¼Œè°ƒåº¦ç¨‹åºè¿˜å¿…é¡»è¦è€ƒè™‘网络拓扑结构和åŒæ¥æ‰€éœ€çš„æˆæœ¬ã€‚如果处ç†ä¸å½“,机架内或机架间åŒæ¥æ‰€äº§ç”Ÿçš„æµé‡å¯èƒ½ä¼šå¾ˆå¤§ï¼Œè¿™å°†æžå¤§åœ°é™ä½Žè®ç»ƒçš„速度和质é‡ã€‚

例如,在1xCPU设计ä¸ï¼Œå››ä¸ª1xCPU主机共享一个50G的网å¡ã€‚如果全部四å°ä¸»æœºåŒæ—¶å°è¯•ä¸Žå…¶ä»–主机的梯度进行åŒæ¥ï¼Œé‚£ä¹ˆå…±äº«çš„网å¡å¾ˆå¿«å°±ä¼šæˆä¸ºç“¶é¢ˆï¼Œè¿™ä¼šå¯¼è‡´æ•°æ®åŒ…下é™å’Œè¯·æ±‚è¶…æ—¶ã€‚å› æ¤ï¼Œç½‘络之间需è¦ç”¨ä¸€ä¸ªå…±åŒçš„设计拓扑和调度程åºï¼Œä»¥ä¾¿åœ¨éžé«˜å³°æ—¶æ®µæœ‰æ•ˆåœ°åˆ©ç”¨é—²ç½®çš„æœåŠ¡å™¨ã€‚å¦å¤–ï¼Œè¿™æ ·çš„ç®—æ³•è¿˜å¿…é¡»å…·å¤‡èƒ½å¤Ÿæ供检查指å‘åœæ¢åŠéšç€è´Ÿè·å˜åŒ–é‡æ–°å¼€å§‹è®ç»ƒçš„能力。

Disaster recovery

Seamlessly handling the loss of part of Facebook's global compute, storage, and network footprint has long been a goal of Facebook's infrastructure. Facebook's disaster recovery team regularly runs internal drills to find and fix the weakest links in the global infrastructure and software stack. These disruptive drills include taking entire data centers offline with little or no notice. They confirm that losing any one of our global data centers causes minimal disruption to the business.

对于机器å¦ä¹ çš„è®ç»ƒå’ŒæŽ¨ç†éƒ¨åˆ†ï¼Œå®¹ç¾çš„é‡è¦æ€§æ˜¯ä¸è¨€è€Œå–»çš„ã€‚å°½ç®¡é©±åŠ¨å‡ ä¸ªå…³é”®æ€§é¡¹ç›®çš„æŽ¨ç†è¿‡ç¨‹å分é‡è¦è¿™ä¸€è§‚点以并ä¸è®©äººæ„外,但在注æ„åˆ°å‡ ä¸ªå…³é”®äº§å“çš„å¯æµ‹é‡é€€åŒ–之å‰å‘现其对频ç¹è®ç»ƒçš„ä¾èµ–性ä¾ç„¶æ˜¯ä¸€ä¸ªçš„惊喜。

下文讨论了三ç§ä¸»è¦äº§å“频ç¹æœºå™¨å¦ä¹ è®ç»ƒçš„é‡è¦æ€§ï¼Œå¹¶è®¨è®ºä¸ºé€‚应这ç§é¢‘ç¹çš„è®ç»ƒæ‰€éœ€è¦çš„基础架构支æŒï¼Œä»¥åŠè¿™ä¸€åˆ‡æ˜¯å¦‚何耦åˆåˆ°ç¾éš¾åŽæ¢å¤æ€§çš„。

如果ä¸è®ç»ƒæ¨¡åž‹ä¼šå‘生什么?我们分æžäº†ä¸‰ä¸ªåˆ©ç”¨æœºå™¨å¦ä¹ è®ç»ƒçš„关键性æœåŠ¡ï¼Œä»¥ç¡®å®šé‚£äº›ä¸èƒ½é¢‘ç¹æ‰§è¡Œæ“作æ¥è®ç»ƒæ›´æ–°æ¨¡åž‹ï¼ˆåŒ…括广告,新闻)和社区诚信所带æ¥çš„å½±å“ã€‚æˆ‘ä»¬çš„ç›®æ ‡æ˜¯ç†è§£åœ¨å¤±åŽ»è®ç»ƒæ¨¡åž‹èƒ½åŠ›çš„一个星期,一个月,å…个月时间内所带æ¥çš„å½±å“。

第一个明显的影å“æ˜¯å·¥ç¨‹å¸ˆçš„æ•ˆçŽ‡ï¼Œå› ä¸ºæœºå™¨å¦ä¹ 的进度通常与频ç¹çš„实验相关。虽然许多模型å¯ä»¥åœ¨CPU上进行è®ç»ƒï¼Œä½†æ˜¯åœ¨GPU上è®ç»ƒå¾€å¾€èƒ½å¤Ÿæ˜¾è‘—地æå‡æ¨¡åž‹æ€§èƒ½ã€‚è¿™äº›åŠ é€Ÿæ•ˆæžœèƒ½å¤Ÿè®©æ¨¡åž‹è¿ä»£æ—¶é—´æ›´å¿«ï¼Œä»¥åŠå¹¶å¸¦æ¥æŽ¢ç´¢æ›´å¤šæƒ³æ³•çš„èƒ½åŠ›ã€‚å› æ¤ï¼ŒGPUçš„æŸå¤±å°†å¯¼è‡´è¿™äº›å·¥ç¨‹å¸ˆç”Ÿäº§åŠ›ä¸‹é™ã€‚

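In addition, we identified a substantial impact on Facebook products, particularly those that refresh their models frequently. We summarize below the problems these products run into when they use stale models.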

Community Integrity: Creating a safe place for people to share and connect is core to Facebook's mission, and detecting offensive content quickly and accurately is central to that mission. Our Community Integrity team relies heavily on machine learning to detect offensive content in text, images, and video. Offensive content detection is a specialized form of spam detection. Adversaries constantly look for new and innovative ways to bypass our classifiers and show objectionable content to our users, so Facebook frequently trains models to learn these new patterns. Each training iteration takes several days to produce an accurate model for detecting objectionable images. We continue to push the limits of how fast models can be trained with distributed training techniques, but incomplete training leads to degraded content quality.

消æ¯æŽ¨é€ï¼šæˆ‘们的å‘现并ä¸ä»¤äººæƒŠè®¶ï¼Œåƒæ¶ˆæ¯æŽ¨é€æ ·çš„产å“对机器å¦ä¹ 和频ç¹çš„模型è®ç»ƒä¾èµ–很大。在用户æ¯æ¬¡è®¿é—®æˆ‘们网站的过程ä¸ï¼Œä¸ºå…¶ç¡®å®šæœ€ç›¸å…³çš„内容,éžå¸¸ä¾èµ–先进的机器å¦ä¹ 算法æ¥æ£ç¡®æŸ¥æ‰¾å’ŒæŽ’列内容。与其他一些产å“ä¸åŒï¼ŒFeed排åçš„å¦ä¹ æ–¹å¼åˆ†ä¸¤æ¥è¿›è¡Œï¼šç¦»çº¿æ¥éª¤æ˜¯åœ¨CPU / GPU上è®ç»ƒæœ€ä½³æ¨¡åž‹ï¼Œåœ¨çº¿æ¥éª¤åˆ™æ˜¯åœ¨CPU上进行的æŒç»åœ¨çº¿è®ç»ƒã€‚陈旧的消æ¯æŽ¨é€æ¨¡å¼å¯¹æ¶ˆæ¯è´¨é‡æœ‰ç€å¯é‡åŒ–çš„å½±å“。消æ¯æŽ¨é€å›¢é˜Ÿä¸æ–在他们的排åæ¨¡åž‹ä¸Šè¿›è¡Œåˆ›æ–°ï¼Œå¹¶è®©æ¨¡åž‹æ¨¡æ‹Ÿè‡ªèº«ï¼Œè¿›è¡Œå‡ å°æ—¶çš„ä¸é—´æ–çš„è®ç»ƒï¼Œä»¥æ¤æ¥æŽ¨è¿›æ¨¡åž‹çš„è¿›æ¥ã€‚而如果数æ®ä¸å¿ƒç¦»çº¿ä¸€ä¸ªæ˜ŸæœŸï¼Œå¸¦æ¥çš„è®ç»ƒè¿‡ç¨‹çš„æŸå¤±è®¡ç®—å°±å¯èƒ½ä¼šé˜»ç¢å›¢é˜ŸæŽ¢ç´¢æ–°çš„能力模型和新的å‚数的进度。

广告:最令人惊讶的是频ç¹çš„广告排å模å¼çš„è®ç»ƒçš„é‡è¦æ€§ã€‚寻找和展示åˆé€‚的广告æžå…¶ä¾èµ–机器å¦ä¹ åŠå…¶åˆ›æ–°ã€‚为了强调这ç§ä¾èµ–çš„é‡è¦æ€§ï¼Œæˆ‘们了解到,利用过时的机器模型的影å“是以å°æ—¶ä¸ºå•ä½æ¥è¡¡é‡çš„。 æ¢å¥è¯è¯´ï¼Œä½¿ç”¨ä¸€ä¸ªæ—§çš„模型比使用一个仅è®ç»ƒä¸€ä¸ªå°æ—¶çš„模型è¦ç³Ÿç³•å¾—多。

总的æ¥è¯´ï¼Œæˆ‘们的调查强调了机器å¦ä¹ è®ç»ƒå¯¹äºŽè®¸å¤šFacebook产å“与æœåŠ¡çš„é‡è¦æ€§ã€‚在日益增长的工作负è·é¢å‰ï¼Œå®¹ç¾å·¥ä½œä¸åº”该被低估。

容ç¾æž¶æž„支æŒï¼šä¸Šå›¾å±•ç¤ºäº†Facebookæ•°æ®ä¸å¿ƒçš„基础架构在全çƒçš„分布情况。如果我们关注在è®ç»ƒå’ŒæŽ¨ç†è¿‡ç¨‹ä¸CPU资æºçš„å¯ç”¨æ€§ï¼Œé‚£ä¹ˆæˆ‘们将有充足的计算适应能力æ¥åº”å¯¹å‡ ä¹Žæ¯ä¸ªåœ°åŒºçš„æœåŠ¡å™¨çš„æŸå¤±éœ€æ±‚。为GPU资æºæ供平ç‰å†—余的é‡è¦æ€§æœ€åˆè¢«ä½Žä¼°äº†ã€‚然而,利用GPU进行è®ç»ƒçš„åˆå§‹å·¥ä½œé‡ä¸»è¦æ˜¯è®¡ç®—机视觉应用程åºå’Œè®ç»ƒè¿™äº›æ¨¡åž‹æ‰€éœ€çš„æ•°æ®ï¼Œè¿™äº›è®ç»ƒæ•°æ®åœ¨å…¨çƒèŒƒå›´å†…都能被å¤åˆ¶å¾—到。当GPUs刚开始被部署到Facebook的基础设施ä¸æ—¶ï¼Œå¯¹å•ä¸€åŒºåŸŸè¿›è¡Œå¯ç®¡ç†æ€§æ“作似乎是明智的选择,直到设计æˆç†Ÿï¼Œæˆ‘们都å¯ä»¥åŸºäºŽä»–们的æœåŠ¡å’Œç»´æŠ¤è¦æ±‚æ¥å»ºç«‹å†…éƒ¨çš„ä¸“ä¸šçŸ¥è¯†ã€‚è¿™ä¸¤ä¸ªå› ç´ çš„ç»“æžœå°±æ˜¯æˆ‘ä»¬å°†æ‰€æœ‰ç”Ÿäº§GPU物ç†éš”离到了å¦ä¸€ä¸ªæ•°æ®ä¸å¿ƒåŒºåŸŸã€‚

然而,在那之åŽä¼šå‘ç”Ÿäº†å‡ ä¸ªå…³é”®çš„å˜åŒ–。由于越æ¥è¶Šå¤šçš„产å“采用深度å¦ä¹ 技术,包括排å,推è和内容ç†è§£ç‰ï¼ŒGPU计算和大数æ®çš„é‡è¦æ€§å°†å¢žåŠ 。æ¤å¤–,计算数æ®æ‰˜ç®¡å¹¶å¤æ‚化是æœå‘一个巨型区域战略枢纽的å˜å‚¨æ–¹å¼ã€‚大型地区的概念æ„味ç€å°‘æ•°æ•°æ®ä¸å¿ƒåœ°åŒºå°†å®¹çº³Facebook的大部分数æ®ã€‚顺便说一下,该地区所有的GPU群并没有驻留在大型å˜å‚¨åŒºåŸŸã€‚

Thus, beyond the importance of co-locating compute with data, it became especially important to consider what would happen if we lost the GPU region entirely. That consideration drove us to diversify the physical locations of the GPUs used for ML training.

未æ¥çš„设计方å‘:硬件ã€è½¯ä»¶å’Œç®—法

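As model complexity and dataset sizes grow, the computational demands of machine learning grow with them. ML workloads exhibit a number of algorithmic and numerical properties that influence hardware choices.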

我们知é“,å·ç§¯å’Œä¸ç‰å°ºå¯¸çš„矩阵乘法是深度å¦ä¹ å‰é¦ˆå’ŒåŽå‘è¿‡ç¨‹çš„å…³é”®è®¡ç®—å†…æ ¸ã€‚åœ¨æ‰¹å¤„ç†é‡è¾ƒå¤§çš„情况下,æ¯ä¸ªå‚æ•°æƒé‡éƒ½ä¼šè¢«æ›´ç»å¸¸åœ°é‡ç”¨ï¼Œå› æ¤å¿…é¡»è¿›è¡Œè¿™äº›å†…æ ¸åœ¨ç®—æœ¯å¼ºåº¦ï¼ˆæ¯ä¸ªè¢«è®¿é—®å†…å˜å—节的计算æ“作次数)方é¢çš„改进。æ高算术强度通常会æé«˜åº•å±‚ç¡¬ä»¶çš„æ•ˆçŽ‡ï¼Œå› æ¤åœ¨å»¶è¿Ÿçš„é™åˆ¶ä¹‹å†…,以更大的数æ®æ‰¹é‡è¿è¡Œæ˜¯å¯å–çš„åšæ³•ã€‚计算机器å¦ä¹ 负载的上下é™å°†æœ‰åŠ©äºŽæ›´å®½çš„SIMDå•å…ƒçš„使用,åŠä¸“门的å·ç§¯æˆ–矩阵乘法引擎ã€ä¸“门的å处ç†å™¨çš„部署。

在æŸäº›æƒ…况下,对æ¯ä¸ªèŠ‚点使用å°æ‰¹é‡æ•°æ®ï¼Œåœ¨å¹¶å‘查询低的实时推ç†ä¸æˆ–在è®ç»ƒè¿‡ç¨‹ä¸æ‰©å±•åˆ°å¤§é‡çš„节点的情况,也是必需的。å°çš„batch规模通常会有较低的算术强度(例如,全连接层ä¸çŸ©é˜µçš„矢é‡ä¹˜æ³•è¿ç®—,本质上是有带宽é™åˆ¶çš„)。当完整模型ä¸é€‚åˆç‰‡ä¸ŠSRAMå•å…ƒæˆ–最åŽä¸€çº§ç¼“å˜æ—¶ï¼Œè¿™ç§small batchæ•°æ®å¯èƒ½ä¼šé™ä½Žå‡ 个常è§æƒ…况的性能。

这个问题å¯ä»¥é€šè¿‡æ¨¡åž‹åŽ‹ç¼©ï¼Œé‡åŒ–和高带宽内å˜æ¥ç¼“解。模型压缩å¯ä»¥é€šè¿‡å’Œ/或é‡åŒ–稀ç–æ¥å®žçŽ°ã€‚è®ç»ƒæœŸé—´çš„稀ç–修剪连接(Sparsification prunes connections)会导致一个更å°çš„模型。é‡åŒ–ä½¿ç”¨å®šç‚¹æ•´æ•°æˆ–æ›´çª„çš„æµ®ç‚¹æ ¼å¼æ¥åŽ‹ç¼©æ¨¡åž‹ï¼Œè€Œä¸æ˜¯ç”¨äºŽåŠ æƒå’Œæ¿€æ´»çš„FP32(å•ç²¾åº¦æµ®ç‚¹ï¼‰ã€‚ ç›®å‰å·²ç»æœ‰ä¸€äº›ä½¿ç”¨8ä½æˆ–16ä½çš„常用网络,被è¯æ˜Žäº†ç›¸å½“的准确性。还有一些æ£åœ¨è¿›è¡Œçš„ç ”ç©¶å·¥ä½œæ˜¯ä½¿ç”¨1或2ä½å¯¹æ¨¡åž‹æƒé‡è¿›è¡ŒåŽ‹ç¼©ã€‚除了å‡å°‘内å˜å 用é‡å¤–,修剪和é‡åŒ–还å¯ä»¥é€šè¿‡é™ä½Žå¸¦å®½æ¥åŠ 速底层硬件,并且å…许硬件架构在使用固定点数进行æ“作时具有更高的计算速率,这比è¿è¡ŒFP32值更有效。

缩çŸè®ç»ƒæ—¶é—´ï¼ŒåŠ å¿«æ¨¡åž‹ä¼ é€’éœ€è¦åˆ†å¸ƒå¼è®ç»ƒã€‚æ£å¦‚在第四节Bä¸è®¨è®ºçš„é‚£æ ·ï¼Œåˆ†å¸ƒå¼è®ç»ƒéœ€è¦å¯¹ç½‘络拓扑结构和调度进行仔细的ååŒè®¾è®¡ï¼Œä»¥æœ‰æ•ˆåˆ©ç”¨ç¡¬ä»¶å¹¶å®žçŽ°è‰¯å¥½çš„è®ç»ƒå’Œè´¨é‡ã€‚æ£å¦‚第III部分B节ä¸æ述的,分布å¼è®ç»ƒä¸æœ€å¹¿æ³›ä½¿ç”¨çš„并行性形å¼æ˜¯æ•°æ®å¹¶è¡Œæ€§ï¼Œï¼Œè¿™éœ€è¦åŒæ¥æ‰€æœ‰èŠ‚点的梯度下é™ï¼Œè¦ä¹ˆåŒæ¥è¦ä¹ˆå¼‚æ¥ã€‚åŒæ¥SGD需è¦å…¨éƒ¨å‡å°‘æ“作(all-reduce operation)。 å½“ä½¿ç”¨é€’å½’åŠ å€ï¼ˆå’Œå‡åŠï¼‰æ‰§è¡Œæ—¶ï¼Œå…¨éƒ¨å‡å°‘æ“作呈现出æ¥çš„一个有趣的属性是带宽需求éšç€é€’归级别呈指数级下é™ã€‚

这鼓励了我们进行分层系统的设计,其ä¸çš„节点在层次结构的底部形æˆè¶…节点连接(例如,通过高带宽点实现点到点的连接或高ä½å¼€å…³ï¼‰ï¼›åœ¨å±‚次结构的顶部,超节点通过较慢的网络(例如,以太网)连接。æ¢å¥è¯è¯´ï¼Œå¼‚æ¥SGD(ä¸ç‰å¾…其他节点处ç†æ‰¹å¤„ç†ï¼‰æ›´éš¾ï¼Œé€šå¸¸éœ€è¦é€šè¿‡å…±äº«å‚æ•°æœåŠ¡å™¨å®Œæˆ; 节点将其更新å‘é€åˆ°å‚æ•°æœåŠ¡å™¨ï¼Œè¯¥æœåŠ¡å™¨èšåˆå¹¶å°†å…¶åˆ†å‘回节点。为å‡å°‘更新的陈旧程度并å‡è½»å‚æ•°æœåŠ¡å™¨çš„压力,混åˆè®¾è®¡å¯èƒ½æ˜¯ä¸ªå¥½çš„æ€è·¯ã€‚åœ¨è¿™æ ·çš„ä¸€ä¸ªè®¾è®¡ä¸ï¼Œå¼‚æ¥æ›´æ–°å‘生在超节点内具有高带宽和低延迟连接性的本地节点,而åŒæ¥æ›´æ–°å‘生在超节点之间。进一æ¥å¢žåŠ 扩展性需è¦å¢žåŠ 批é‡çš„大å°è€Œä¸æ˜¯ä»¥ç‰ºç‰²æ”¶æ•›æ€§ä¸ºä»£ä»·ã€‚ä¸ç®¡æ˜¯åœ¨Facebookå†…éƒ¨è¿˜æ˜¯å¤–éƒ¨ï¼Œè¿™éƒ½æ˜¯ä¸€ä¸ªå¾ˆæ´»è·ƒçš„ç®—æ³•ç ”ç©¶é¢†åŸŸã€‚

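As described in Section II, our mission at Facebook is to build high-performance, energy-efficient systems for our machine-learning-based applications. We continually evaluate and build novel hardware solutions, while keeping track of algorithmic changes and their potential impact on our systems.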

Conclusion

基于机器å¦ä¹ 的工作负载é‡è¦æ€§çš„å¢žåŠ æ£åœ¨å½±å“åˆ°ç³»ç»Ÿå †æ ˆçš„æ‰€æœ‰éƒ¨åˆ†ã€‚å¯¹æ¤ï¼Œè®¡ç®—机体系结构社区如何åšå‡ºæœ€å¥½çš„应对ç–略以åŠç”±æ¤äº§ç”Ÿçš„挑战将æˆä¸ºå¤§å®¶è¶Šæ¥è¶Šå…³æ³¨çš„一个è¯é¢˜ã€‚虽然以å‰æˆ‘们已ç»å›´ç»•æœ‰æ•ˆåœ°å¤„ç†MLè®ç»ƒå’ŒæŽ¨ç†çš„å¿…è¦è®¡ç®—进行了大é‡å·¥ä½œï¼Œä½†æ˜¯è€ƒè™‘到解决方案在应用到大规模数æ®æ—¶å°†å‡ºçŽ°æŒ‘战,情况就会å‘生改å˜ã€‚

在Facebook,我们å‘çŽ°äº†å‡ ä¸ªå…³é”®å› ç´ ï¼Œè¿™äº›å› ç´ åœ¨è§„æ¨¡åŒ–ä»¥åŠé©±åŠ¨æ•°æ®ä¸å¿ƒåŸºç¡€æž¶æž„的设计决ç–æ—¶éžå¸¸é‡è¦ï¼šæ•°æ®ä¸Žè®¡ç®—机ååŒå®šä½çš„é‡è¦æ€§ï¼Œå¤„ç†å„ç§æœºå™¨å¦ä¹ 工作负载(ä¸ä»…仅是计算机视觉)的é‡è¦æ€§ï¼Œç”±éžé«˜å³°æœŸçš„æ¯æ—¥å¾ªçŽ¯äº§ç”Ÿçš„剩余容é‡è€Œå¸¦æ¥çš„机会。我们在设计包å«å®šåˆ¶è®¾è®¡çš„,易于使用的开æºç¡¬ä»¶çš„端到端解决方案,以åŠå¹³è¡¡æ€§èƒ½å’Œå¯ç”¨æ€§çš„å¼€æºè½¯ä»¶ç”Ÿæ€ç³»ç»Ÿæ—¶ï¼Œè€ƒè™‘了上述æ¯ä¸€ä¸ªå› ç´ ã€‚è¿™äº›è§£å†³æ–¹æ¡ˆçŽ°åœ¨æ£åœ¨æœåŠ¡è¶…过21亿人的大规模机器å¦ä¹ 工作负载æ供强大的动力,åŒæ—¶ä¹Ÿä½“现了相关专家在跨越机器å¦ä¹ 算法和系统设计方é¢æ‰€ä»˜å‡ºçš„努力。
