▌ Feature Pyramid Networks

First, we introduce the famous Feature Pyramid Network (a paper published at CVPR 2017, hereinafter referred to as FPN).

If you have been following the latest developments in computer vision over the past two years, you must have heard of this network and, like everyone else, waited for the authors to open-source the project. The FPN paper presents a very good idea. We all know how difficult it is to build a baseline model that works across multiple tasks, subject areas, and application domains.

FPN can be seen as an extension of general-purpose feature extraction networks (such as ResNet and DenseNet): you can pick the pre-trained FPN model you want from a deep learning model library and use it directly!

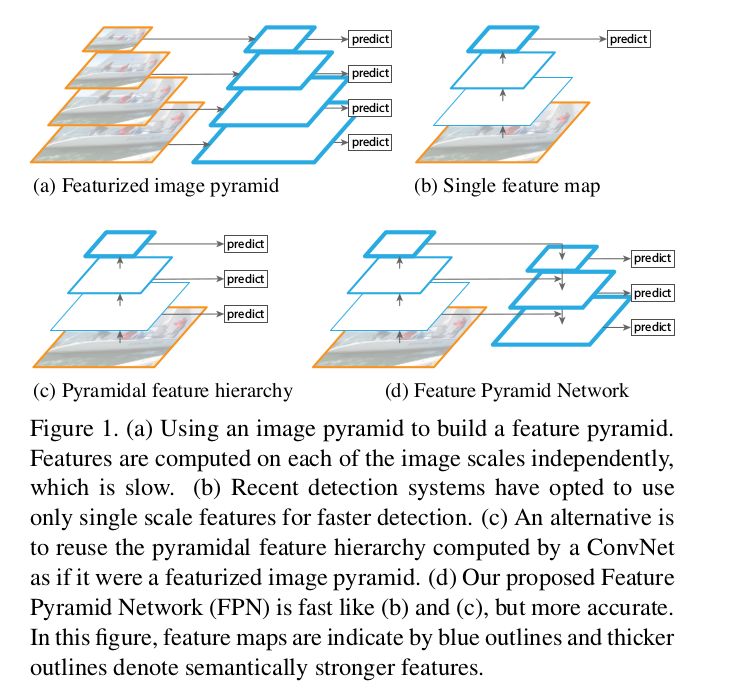

Typically, the objects in an image come in many different sizes. A typical dataset cannot capture all of these scales, so people use image pyramids: the image is downsampled to multiple resolutions and features are extracted at each one so that the CNN can handle them. The biggest drawback of this method is that it is very slow, so we prefer to predict from a single image scale, which loses a large amount of feature information. Some researchers instead take predictions from the intermediate layers of the feature hierarchy.

In other words, taking ResNet as an example, a deconvolution layer is placed after several ResNet blocks, and the segmentation output (or, for classification, probably a 1x1 convolution and GlobalPool) is obtained with auxiliary information and auxiliary losses. This is the workflow of most existing model architectures.

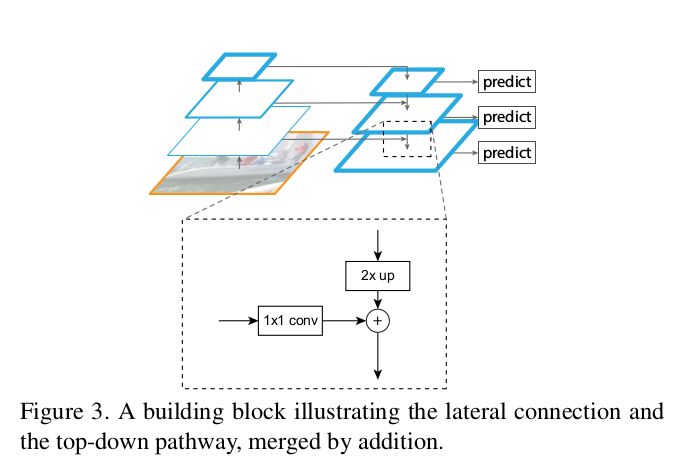

Back to our theme: the FPN authors propose a novel idea that effectively improves on existing approaches. They don't just use lateral connections, they also use a top-down pathway, and combine the two with a simple MergeLayer (mode='addition'), which turns out to be very effective! The low-level feature maps extracted by the early convolutional layers are not semantically strong enough to be used directly for classification, while the deep feature maps carry stronger semantics. FPN exploits this by pulling more semantic information from the deep feature maps.

In addition, the top-down pathway carries information from the deepest layer of the network back to the higher-resolution Fmaps. By skillfully combining the two, FPN extracts semantically stronger features from the image and avoids the information loss of existing approaches.

Some other implementation details

Feature pyramid: All feature maps of the same size are considered to belong to the same stage. The output of the last layer of each stage serves as the reference Fmaps of the pyramid, for example the outputs of the 2nd, 3rd, 4th, and 5th blocks in ResNet. You can change the pyramid depending on memory and your specific use case.

Lateral connections: The bottom-up feature map goes through a 1x1 convolution, and the top-down path goes through 2× upsampling before the two are merged. The features of the upper layer provide coarse-grained image features in a top-down manner, while the lateral connections add finer-grained detail from the bottom-up path. Here I cite some of the pictures in the paper to help you understand the process further.
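The merge step described above can be sketched in a few lines of plain Python. This is only an illustrative sketch, not the authors' implementation: feature maps are single-channel lists of rows, the 1x1 lateral convolution is reduced to a scalar multiplication, nearest-neighbor upsampling is assumed, and the function names (`upsample2x`, `lateral_1x1`, `build_top_down`) are hypothetical. The smoothing convolution that real implementations usually apply after the merge is omitted.

```python
def upsample2x(fmap):
    """Nearest-neighbor 2x upsampling of a 2-D feature map (list of rows)."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]  # repeat each column twice
        out.append(wide)
        out.append(list(wide))                     # repeat each row twice
    return out


def lateral_1x1(fmap, weight=1.0):
    """Stand-in for the 1x1 lateral convolution on a single-channel map."""
    return [[weight * v for v in row] for row in fmap]


def merge(top_down, bottom_up, weight=1.0):
    """Upsample the coarser top-down map and add the lateral features."""
    up = upsample2x(top_down)
    lat = lateral_1x1(bottom_up, weight)
    return [[u + l for u, l in zip(u_row, l_row)] for u_row, l_row in zip(up, lat)]


def build_top_down(bottom_up_maps, weight=1.0):
    """bottom_up_maps is ordered fine to coarse (e.g. C3, C4, C5).

    Returns the merged pyramid maps in the same order.
    """
    pyramid = [lateral_1x1(bottom_up_maps[-1], weight)]  # start at the deepest map
    for c in reversed(bottom_up_maps[:-1]):
        pyramid.insert(0, merge(pyramid[0], c, weight))
    return pyramid


c3 = [[0] * 4 for _ in range(4)]
c4 = [[1, 2], [3, 4]]
c5 = [[10]]
p3, p4, p5 = build_top_down([c3, c4, c5])
```

Each pyramid level ends up with the resolution of its bottom-up map while inheriting the values propagated down from the deepest level.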

In the FPN paper, the authors also present a simple demo to visualize the idea behind the design.

As mentioned earlier, FPN is a baseline model that can be used across many tasks, such as object detection, segmentation, pose estimation, face detection, and other computer vision applications. The title of the paper is Feature Pyramid Networks for Object Detection, and it has been cited more than 100 times since its publication in 2017!

In addition, the authors use FPN as the baseline model in their experiments with RPN (Region Proposal Network) and Faster R-CNN, which shows the power of FPN. Below I list some key experimental details, which can also be found in the paper.

Experimental points

RPN: The authors replace the single-scale Fmap with FPN and use a single-scale anchor at each level (thanks to FPN, there is no need for multi-scale anchors at any one level). In addition, the authors show that all levels of the feature pyramid share similar semantic information.

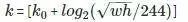

Faster R-CNN: The authors treat the feature pyramid much like the output of an image pyramid and assign each region of interest (RoI) to a specific pyramid level using the following formula:

$$k = \left\lfloor k_0 + \log_2\left(\sqrt{wh}/224\right) \right\rfloor$$

where $w$ and $h$ are the width and height of the RoI, $k$ is the level the RoI is assigned to, and $k_0$ is the level to which an RoI with $w = 224$, $h = 224$ is mapped.
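As a quick sanity check of the RoI-to-level assignment, here is a minimal sketch of the rule k = floor(k0 + log2(sqrt(w·h)/224)) from the FPN paper. The function name `roi_to_level` is hypothetical; k0 = 4 follows the paper, while clamping the result to levels 2 through 5 is an assumption borrowed from common implementations.

```python
import math


def roi_to_level(w, h, k0=4, k_min=2, k_max=5, canonical=224):
    """Map an RoI of width w and height h to a pyramid level.

    k0 is the level assigned to a canonical 224x224 RoI.
    The clamp to [k_min, k_max] is an assumption, not part of the formula.
    """
    k = math.floor(k0 + math.log2(math.sqrt(w * h) / canonical))
    return max(k_min, min(k_max, k))


levels = [roi_to_level(s, s) for s in (56, 112, 224, 448)]
```

Smaller RoIs land on finer (lower) levels and larger RoIs on coarser ones; halving the RoI side length moves it down exactly one level.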

With FPN, Faster R-CNN achieves state-of-the-art results on the COCO dataset without any bells and whistles.

The authors conducted ablation studies on the role of each module to validate the ideas presented in the paper.

In addition, building on the DeepMask and SharpMask papers, the authors show how FPN can be used to generate segmentation proposals.

For other implementation details, experimental settings, and so on, interested readers can read the paper carefully.

Implementation code

The official Caffe2 version:

https://github.com/facebookresearch/Detectron/tree/master/configs/12_2017_baselines

Caffe version: https://github.com/unsky/FPN

PyTorch version: https://github.com/kuangliu/pytorch-fpn (just the network)

MXNet version: https://github.com/unsky/FPN-mxnet

Tensorflow version: https://github.com/yangxue0827/FPN_Tensorflow

▌ RetinaNet: Focal Loss for Dense Object Detection

This architecture was developed by the same team. The paper [2] was published at ICCV 2017, and its first author is also the first author of the FPN paper. It proposes two key ideas: a general loss function, Focal Loss (FL), and a single-stage object detector, RetinaNet. Combining the two, RetinaNet performs very well on the COCO object detection task and surpasses the previous best results held by FPN.

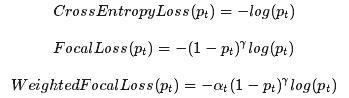

Focal Loss

Focal Loss comes from a clever and simple idea. If you are familiar with weighted loss functions, Focal Loss will look familiar. It is a subtle variation on the weighted loss that makes training focus more on hard-to-classify samples. Its mathematical formula is as follows:

$$FL(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log(p_t)$$

where $\gamma$ is a tunable hyperparameter and $p_t$ is the probability the classifier assigns to the true class of the sample. Setting $\gamma$ greater than 0 reduces the weight of samples that are already well classified. $\alpha_t$ is the class weight from the standard weighted loss function, which the paper refers to as the α-balanced loss. Note that this is a classification loss; RetinaNet combines it with a smooth L1 loss for the object detection task.
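To make the effect of γ concrete, here is a minimal sketch of the α-balanced focal loss, FL(p_t) = -α_t (1 - p_t)^γ log(p_t), for a single binary prediction. The function name `focal_loss` is hypothetical; the defaults γ = 2 and α = 0.25 are the values reported as working best in the RetinaNet paper, and taking α_t = 1 - α for the negative class follows the usual α-balancing convention.

```python
import math


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Alpha-balanced focal loss for one binary prediction.

    p is the predicted probability of the positive class, y is 0 or 1.
    """
    p_t = p if y == 1 else 1.0 - p              # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


easy = focal_loss(0.9, 1)  # well-classified positive
hard = focal_loss(0.1, 1)  # badly classified positive
```

With γ = 0 the modulating factor disappears and the expression falls back to the ordinary weighted cross-entropy; with γ = 2 the easy sample's loss is scaled by (1 - 0.9)² = 0.01 on top of the class weight, while the hard sample keeps most of its loss.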

RetinaNet

YOLOv2 and SSD are currently the leading single-stage detectors. Following them, FAIR has developed its own single-stage detector. The authors point out that neither YOLOv2 nor SSD comes close to the current best results, whereas RetinaNet reaches them easily as a single-stage detector, and does so quickly. They attribute this to the new loss function (Focal Loss) rather than to the network structure, which is simply based on FPN.

The authors argue that single-stage detectors face a very large number of background samples, and therefore an extreme class imbalance (not just a mild imbalance among the positive categories). A standard weighted loss only addresses the imbalance in sample counts, whereas Focal Loss targets hard versus easy samples, which makes it a good fit for RetinaNet.

Notes:

A two-stage detector does not have to worry about the imbalance between positive and negative samples, because most of the easy negatives are removed in the first stage.

RetinaNet consists of two parts: a backbone network (i.e., a convolutional feature extractor such as FPN) and two task-specific subnetworks (a classifier and a bounding box regressor).

The performance of the network does not change much when different design parameters are used.

Anchors, or anchor boxes, are the same as the anchors [5] in RPN. Each anchor is centered on the position of the sliding window and is characterized by a size and an aspect ratio. The sizes range from 32² to 512², and the aspect ratios are {1:2, 1:1, 2:1}.

FPN is used to extract image features, and at each pyramid level a cls+bbox subnetwork produces the corresponding outputs for every anchor at every location.
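The anchor shapes mentioned above can be generated with a few lines of Python. This is a sketch under stated assumptions: the names `anchor_shapes`, `anchors_at`, and `LEVEL_AREAS` are hypothetical, the ratio is interpreted as height/width, and the assignment of one base area per pyramid level (32² on P3 up to 512² on P7) follows the RetinaNet paper rather than the text above. The extra per-level scales used in the paper are left out.

```python
import math


def anchor_shapes(area, ratios=(0.5, 1.0, 2.0)):
    """Return (width, height) pairs with the given area, one per aspect ratio (h/w)."""
    shapes = []
    for r in ratios:
        w = math.sqrt(area / r)
        h = w * r
        shapes.append((w, h))
    return shapes


def anchors_at(cx, cy, area, ratios=(0.5, 1.0, 2.0)):
    """Boxes (x1, y1, x2, y2) centered on the sliding-window position (cx, cy)."""
    return [
        (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
        for w, h in anchor_shapes(area, ratios)
    ]


# One base area per pyramid level: 32^2 on P3 up to 512^2 on P7.
LEVEL_AREAS = {f"P{lvl}": (2 ** (lvl + 2)) ** 2 for lvl in range(3, 8)}

boxes = anchors_at(0.0, 0.0, LEVEL_AREAS["P3"])
```

All three boxes at a location share the same center and area; only their shape changes with the aspect ratio.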

Implementation code

The official Caffe2 version:

https://github.com/facebookresearch/Detectron/tree/master/configs/12_2017_baselines

PyTorch version: https://github.com/kuangliu/pytorch-retinanet

Keras version: https://github.com/fizyr/keras-retinanet

MXNet version: https://github.com/unsky/RetinaNet

▌ Mask R-CNN

As mentioned above, Mask R-CNN [3] was developed by almost the same team and was published at ICCV 2017. It addresses instance segmentation of images. In simple terms, instance segmentation is object detection where, instead of a bounding box, the goal is to give an accurate segmentation mask for each detected object. The task is simple to state, but making the model work properly, reach the current best level, and come with pre-trained models that speed up segmentation work is far from easy.

TL;DR: If you understand how Faster R-CNN works, then Mask R-CNN will be very simple for you: it just adds a network branch for segmentation on top of Faster R-CNN. The network has three branches that correspond to three different tasks: classification, bounding box regression, and instance segmentation.

It is worth noting that the biggest contribution of Mask R-CNN is that it achieves the best instance segmentation results with a simple and basic network design, without a complicated training procedure or parameter tuning, while remaining efficient at run time.

I really like this paper because its idea is simple. However, those seemingly simple things come with a lot of explanation, for example the comparison of multinomial masks and independent masks (softmax vs. sigmoid).

In addition, the paper does not assume a large amount of prior knowledge, so it is easy to follow. If you are interested, take a closer look at the paper and you may find some interesting details. Assuming a basic understanding of Faster R-CNN, I have summarized the following details to help you further understand Mask R-CNN:

First, Mask R-CNN is similar to Faster RCNN and is a two-stage network. The first phase is the RPN network.

Mask R-CNN adds a parallel segmentation branch, a small FCN, which predicts the segmentation mask.

The loss function of Mask R-CNN consists of three parts: L_cls, L_box, and L_mask.

Mask R-CNN replaces ROIPool with the ROIAlign layer. Unlike ROIPool, which rounds the result of your calculation (x/spatial_scale) to an integer, ROIAlign keeps the fractional value and uses bilinear interpolation to compute the feature value at that floating-point position.

For example: assume that the ROI height and width are 54 and 167, respectively. The spatial scale, also known as the stride, is the ratio of the image size to the Fmap size (H/h), which is typically 224/14 = 16 (H = 224, h = 14). Then:

ROIPool: 54/16, 167/16 = 3,10

ROIAlign: 54/16, 167/16 = 3.375, 10.4375

ROIAlign then uses bilinear interpolation to sample the feature values at these fractional positions.

Depending on the output shape of ROIAlign (e.g., 7x7), the corresponding region is divided into sub-regions of the appropriate size in a similar way.
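The difference between the two layers in the example above can be reproduced with a small sketch. The helper names (`roipool_coord`, `roialign_coord`, `bilinear`) are hypothetical, ROIPool's quantization is modeled as flooring, and the bilinear sampler assumes non-negative coordinates inside a single-channel map; a real ROIAlign layer additionally averages several sample points per output bin.

```python
import math


def roipool_coord(x, stride=16):
    """ROIPool-style quantization: drop the fractional part."""
    return int(x // stride)


def roialign_coord(x, stride=16):
    """ROIAlign keeps the exact fractional coordinate."""
    return x / stride


def bilinear(fmap, y, x):
    """Bilinearly interpolate a 2-D feature map (list of rows) at (y, x)."""
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1 = min(y0 + 1, len(fmap) - 1)     # clamp at the bottom border
    x1 = min(x0 + 1, len(fmap[0]) - 1)  # clamp at the right border
    dy, dx = y - y0, x - x0
    top = fmap[y0][x0] * (1 - dx) + fmap[y0][x1] * dx
    bottom = fmap[y1][x0] * (1 - dx) + fmap[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


pooled = (roipool_coord(54), roipool_coord(167))
aligned = (roialign_coord(54), roialign_coord(167))
value = bilinear([[0, 1], [2, 3]], 0.5, 0.5)
```

Here `pooled` reproduces the quantized ROIPool values, `aligned` the fractional ROIAlign values, and `value` is the feature read at the center of a 2x2 map, the average of its four entries.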

Check out the Python implementation of ROIPooling by the Chainer folks and try to implement ROIAlign yourself.

Implementations of ROIAlign are available in several libraries; see the code links given below.

The backbone network of Mask R-CNN is ResNet-FPN.

In addition, I have written an article about the principle of Mask-RCNN, the blog address is: https://coming.soon/.

Implementation code

The official Caffe2 version:

https://github.com/facebookresearch/Detectron/tree/master/configs/12_2017_baselines

Keras version: https://github.com/matterport/Mask_RCNN/

PyTorch version: https://github.com/soeaver/Pytorch_Mask_RCNN/

MXNet version: https://github.com/TuSimple/mx-maskrcnn

▌ Learning to Segment Every Thing

As the title Learning to Segment Every Thing suggests, this paper is about object segmentation, specifically the instance segmentation problem. For real-world applications, the standard segmentation datasets in computer vision cover too few categories. Even COCO [7], the most popular and richest dataset, has only 80 object categories, which is still far from meeting practical needs.

In contrast, datasets for object recognition and detection, such as OpenImages [8], have nearly 6,000 classification categories and 545 detection categories. In addition, Visual Genome, a dataset from Stanford, has nearly 3,000 object categories. However, because each category in this dataset contains too few objects, deep neural networks cannot reach sufficient performance on it, even though its richer set of categories would be more useful in practice, so researchers generally prefer not to use it for classification and detection problems. It is worth noting that this dataset only has object detection (bounding box) labels for its 3,000 categories and does not contain any segmentation labels, so it cannot be used directly for segmentation research.

Let's introduce the paper we are going to talk about [4].

As far as the dataset is concerned, there is actually not much difference between bounding box labels and segmentation labels. The only difference is that the latter are more precise than the former. Therefore, the authors use the bounding box labels of the 3,000 categories in the Visual Genome [9] dataset to tackle the segmentation task. This approach is called weakly supervised learning, meaning that complete supervision for the task at hand is not required. If the COCO + Visual Genome data is used, with both segmentation and bounding box labels, it can also be called semi-supervised learning.

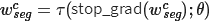

Let's go back to the topic. This paper presents a very good idea. The network architecture is mainly as follows:

The network structure is based on Mask-RCNN.

The model is trained on inputs both with and without mask annotations.

A weight transfer function is added between the segmentation mask head and the bounding box head.

When using an unmasked input, it will

As shown in the following figure: A represents the COCO data set, and B represents the Visual Genome data set, using different training paths for different inputs of the network.

Passing back the two losses simultaneously will result in

Fix: When backpropagating the mask loss, compute the gradient of the predicted mask weights with respect to the weight transfer function parameters θ, but not with respect to the bounding box weights:

$$w^c_{seg} = \tau\left(\mathrm{stop\_grad}(w^c_{det});\ \theta\right)$$

where τ is the weight transfer function that predicts the mask weights.
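The fix can be illustrated with a toy example. The sketch below assumes a purely linear weight transfer function τ (a single matrix θ) and works out the gradients by hand; the names `transfer` and `backward_mask_loss` are hypothetical, and the actual form of τ in the paper may differ. The point is only that the mask loss updates θ while the bounding box weights are treated as constants.

```python
def transfer(theta, w_det):
    """Linear weight transfer: predict mask weights from box weights."""
    return [sum(t * w for t, w in zip(row, w_det)) for row in theta]


def backward_mask_loss(theta, w_det, grad_w_seg):
    """Gradients of the mask loss given its gradient w.r.t. the mask weights.

    theta receives a gradient; w_det is behind a stop-gradient and gets none.
    """
    grad_theta = [[g * w for w in w_det] for g in grad_w_seg]
    grad_w_det = [0.0] * len(w_det)  # stop_grad: the mask loss does not touch w_det
    return grad_theta, grad_w_det


theta = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
w_det = [3.0, 4.0]
w_seg = transfer(theta, w_det)
grad_theta, grad_w_det = backward_mask_loss(theta, w_det, [1.0, 0.0, -1.0])
```

With this arrangement the bounding box weights are shaped only by the box loss, regardless of whether a class has mask annotations.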

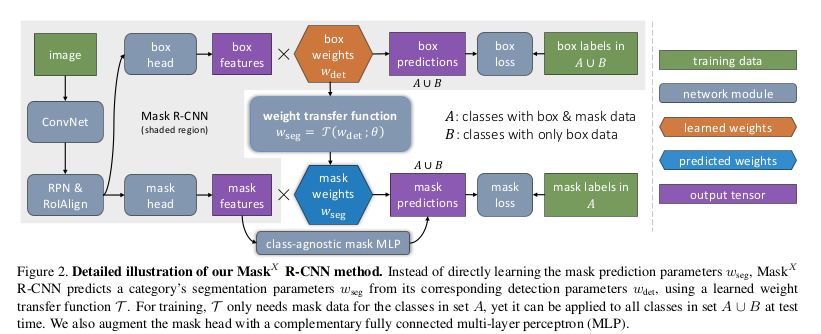

Since the Visual Genome dataset has no segmentation annotations, the model's segmentation accuracy cannot be measured on that dataset, so the authors report validation results on other datasets. The PASCAL-VOC dataset has 20 object categories, all of which are included in the COCO dataset. The authors therefore trained the model with segmentation annotations for these 20 categories and only bounding box labels for the remaining COCO categories.

The paper then reports instance segmentation results on the COCO categories that were trained with bounding box labels only. In addition, since the categories can be split both ways, they also trained the opposite setting. The experimental results are shown in the figure below.
